Data centers are power-hungry beasts. They currently gobble up about 1-2% of global electricity, and experts warn this could skyrocket to 8-13% by 2030 if we don’t get smarter about how we run them.

The good news? Implementing data center energy efficiency best practices can dramatically cut both your energy bills and environmental footprint while keeping performance strong. Let’s dive into what the industry’s top players are doing to maximize efficiency.

Understanding Data Center Energy Consumption Patterns

Where does all that power go in a data center? The breakdown might surprise you.

Cooling systems typically swallow about 40% of total energy consumption, according to McKinsey’s 2024 research. IT equipment accounts for roughly 50%, while power distribution, lighting, and other systems make up the rest.

Most facilities track Power Usage Effectiveness (PUE) as their north star metric. It’s simple: total facility energy divided by IT equipment energy. The ideal PUE is 1.0, but the industry average sits around 1.55.

Leading hyperscalers have pushed this down to 1.2 or lower through rigorous optimization. That’s impressive progress considering the industry average PUE was about 2.5 as recently as 2007!
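The PUE arithmetic is simple enough to script. The Python sketch below computes PUE from metered energy and shows what share of facility energy goes to overhead. The 1,550,000 and 1,000,000 kWh figures are illustrative, chosen to match the 1.55 industry average; they are not measurements.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness = total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("facility energy cannot be lower than IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of facility energy spent on non-IT loads (cooling, power losses, lighting)."""
    return 1.0 - 1.0 / pue_value


# Illustrative month: 1,550 MWh at the utility meter, 1,000 MWh delivered to IT gear.
current = pue(1_550_000, 1_000_000)
print(f"PUE {current:.2f}: {overhead_share(current):.1%} of energy is overhead")

# Same IT load at a hyperscaler-class PUE of 1.2.
print(f"PUE 1.20: {overhead_share(1.2):.1%} of energy is overhead")
```

At the same IT load, moving from 1.55 to 1.2 removes 350 MWh of overhead for every 1,000 MWh of IT energy.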

Now that we understand where the energy goes, let’s explore actionable strategies to minimize consumption while maximizing performance. Each percentage point of improvement adds up to significant savings at scale.

1. Upgrade to Advanced Cooling Technologies

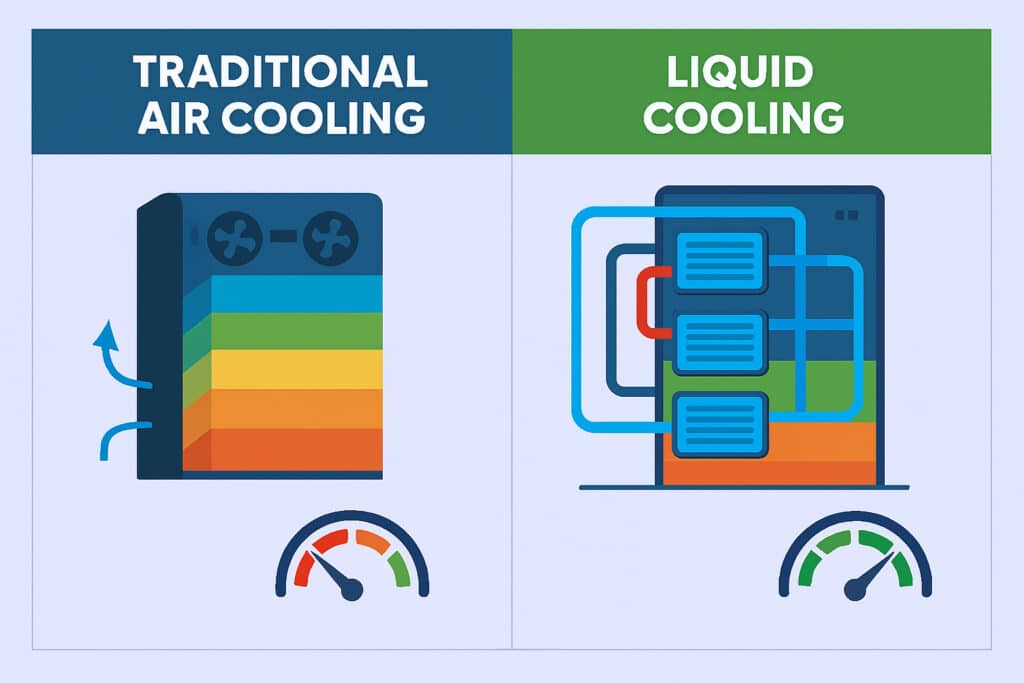

Cooling is your biggest efficiency opportunity. Traditional air cooling works, but newer methods can deliver dramatic energy savings.

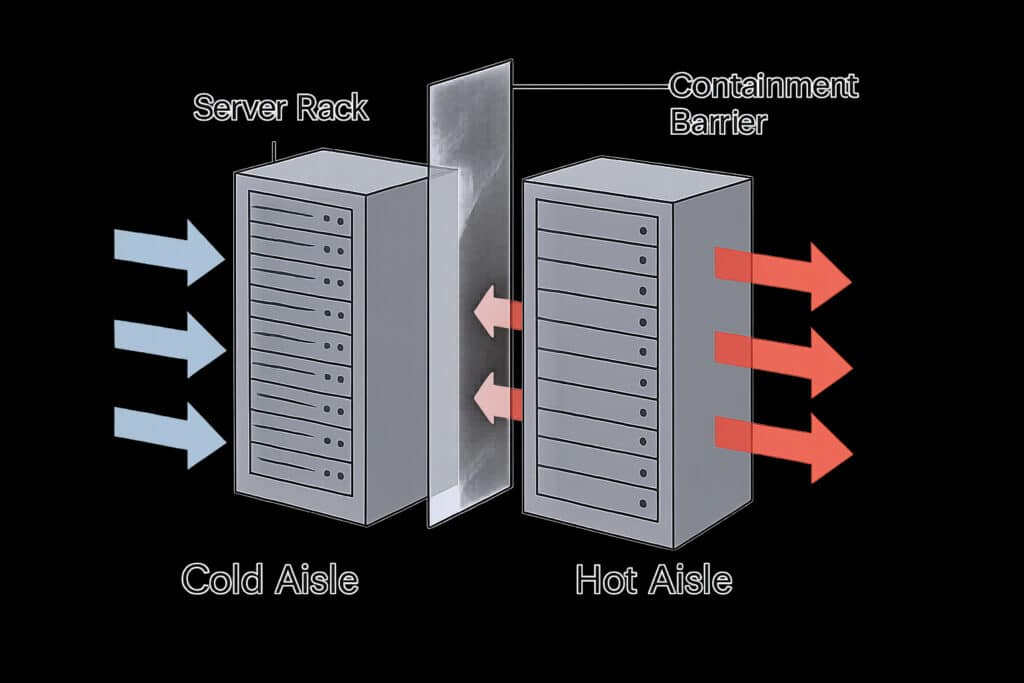

Hot and cold aisle containment is a fundamental win. By physically separating hot exhaust air from cold supply air, you prevent mixing and can reduce the cooling capacity you need by up to 40%.

Liquid cooling technologies are game-changers, especially as computing densities increase. Water and other coolants transfer heat 50-1,000 times more effectively than air.
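One way to see why liquid wins at high densities is the heat-balance relation Q = ρ · V̇ · c_p · ΔT: for a given heat load and temperature rise, the required coolant flow is inversely proportional to the fluid's volumetric heat capacity. The Python sketch below uses approximate room-temperature textbook properties, a hypothetical 30 kW rack, and assumed temperature rises. It illustrates heat capacity, which is related to, but not the same quantity as, the 50-1,000x heat-transfer figure.

```python
# Approximate fluid properties near room temperature (textbook values).
AIR = {"density_kg_m3": 1.2, "cp_j_kg_k": 1005.0}
WATER = {"density_kg_m3": 997.0, "cp_j_kg_k": 4180.0}


def volumetric_flow_m3_s(heat_w: float, fluid: dict, delta_t_k: float) -> float:
    """Flow needed to carry heat_w watts with a delta_t_k rise: Q = rho * V * cp * dT."""
    return heat_w / (fluid["density_kg_m3"] * fluid["cp_j_kg_k"] * delta_t_k)


rack_heat_w = 30_000  # hypothetical 30 kW rack
air = volumetric_flow_m3_s(rack_heat_w, AIR, delta_t_k=12)      # assumed air-side rise
water = volumetric_flow_m3_s(rack_heat_w, WATER, delta_t_k=10)  # assumed water-side rise
print(f"air:   {air:.2f} m^3/s")
print(f"water: {water * 1000:.2f} L/s")
```

With these assumptions, air needs roughly 2 m³/s while water needs well under 1 L/s, a difference of more than three orders of magnitude in volume moved.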

Three liquid cooling approaches dominate the field:

- Direct-to-chip cooling that circulates liquid directly to CPUs and GPUs

- Rear-door heat exchangers that capture heat as it exits racks

- Immersion cooling where servers take a bath in non-conductive fluid

Free cooling (economization) is another smart play. Using cool outside air or water sources can slash mechanical cooling needs, especially in cooler climates.

The cooling evolution continues rapidly, with hybrid approaches gaining traction. These systems combine multiple cooling technologies, automatically selecting the most efficient option based on current conditions and workloads.
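A hybrid controller's core decision can be sketched as a rule that picks the least energy-intensive cooling mode still able to hold the supply setpoint. The Python below is a simplified illustration: the three modes, the 3 °C heat-exchanger approach, and the 6 °C partial-economizer band are assumptions, and real controllers also weigh humidity, wet-bulb temperature, and IT load.

```python
from enum import Enum


class CoolingMode(Enum):
    FREE = "free cooling (economizer only)"
    PARTIAL = "economizer with mechanical trim"
    MECHANICAL = "mechanical cooling only"


def select_mode(
    outdoor_temp_c: float,
    supply_setpoint_c: float,
    approach_c: float = 3.0,
    partial_band_c: float = 6.0,
) -> CoolingMode:
    """Choose the least energy-intensive mode that can still hold the supply setpoint.

    approach_c: assumed minimum gap between outdoor air and supply air that the
    heat exchanger needs. partial_band_c: assumed range above that limit where the
    economizer pre-cools and mechanical cooling makes up the difference.
    """
    free_cooling_limit = supply_setpoint_c - approach_c
    if outdoor_temp_c <= free_cooling_limit:
        return CoolingMode.FREE
    if outdoor_temp_c <= free_cooling_limit + partial_band_c:
        return CoolingMode.PARTIAL
    return CoolingMode.MECHANICAL


for outdoor in (5.0, 22.0, 35.0):
    print(f"{outdoor:>5.1f} C outside -> {select_mode(outdoor, supply_setpoint_c=24.0).value}")
```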

2. Optimize Your Power Distribution System

Once you’ve tackled cooling, it’s time to examine how power moves through your facility. Modern UPS systems achieve 95-98% efficiency in online mode, compared with 80-85% for older transformer-based models. That difference adds up fast!
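To see how fast the gap adds up, the sketch below estimates annual UPS conversion losses for a hypothetical constant 1 MW IT load, comparing 85% and 97% efficiency (points within the ranges above). It assumes efficiency is flat across load, which real UPS efficiency curves are not.

```python
HOURS_PER_YEAR = 8760


def annual_ups_loss_kwh(it_load_kw: float, efficiency: float) -> float:
    """Energy the UPS draws beyond what it delivers, over a year at constant load.

    Input power = output / efficiency, so loss = output * (1 / efficiency - 1).
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return it_load_kw * (1 / efficiency - 1) * HOURS_PER_YEAR


it_load_kw = 1_000  # hypothetical constant 1 MW IT load
legacy = annual_ups_loss_kwh(it_load_kw, 0.85)
modern = annual_ups_loss_kwh(it_load_kw, 0.97)
print(f"legacy UPS loss: {legacy:,.0f} kWh/yr")
print(f"modern UPS loss: {modern:,.0f} kWh/yr")
print(f"difference:      {legacy - modern:,.0f} kWh/yr")
```

Under these assumptions the difference is roughly 1.27 GWh per year, before counting the cooling energy needed to remove the extra heat those losses generate.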

Right-sizing is crucial. Oversized power systems waste energy by operating at suboptimal loads. Implementing scalable power architecture that grows with your needs avoids this inefficiency trap.

High-voltage distribution (415V AC or 380V DC) cuts transformation steps and the associated losses. By bringing higher voltages closer to the rack, you minimize conversion inefficiencies. Industry leaders typically see 2-3% gains here.
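Because each conversion stage passes on only a fraction of its input, end-to-end efficiency is the product of the stage efficiencies, so removing a stage helps even when every remaining stage is good. The stage names and efficiency values below are hypothetical placeholders, not vendor data.

```python
from math import prod

# Hypothetical stage efficiencies for illustration only; real values depend on
# the equipment and on how heavily each stage is loaded.
CONVENTIONAL_CHAIN = {
    "utility transformer": 0.985,
    "UPS": 0.94,
    "PDU step-down transformer (480V to 208V)": 0.97,
    "server power supply": 0.92,
}
# 415V AC three-phase gives about 240V phase-to-neutral at the rack,
# so the PDU step-down stage is assumed away here.
HIGHER_VOLTAGE_CHAIN = {
    "utility transformer": 0.985,
    "UPS": 0.94,
    "server power supply": 0.92,
}


def chain_efficiency(stages: dict[str, float]) -> float:
    """Losses compound, so end-to-end efficiency is the product of every stage."""
    return prod(stages.values())


for label, chain in (("conventional", CONVENTIONAL_CHAIN), ("415V AC", HIGHER_VOLTAGE_CHAIN)):
    print(f"{label}: {chain_efficiency(chain):.1%} of utility power reaches server components")
```

With these placeholder values, dropping the 97%-efficient step-down stage raises the end-to-end figure from about 82.6% to about 85.2%.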

Power monitoring at granular levels enables targeted optimization. Modern power distribution units (PDUs) provide rack-level or even outlet-level visibility, helping identify energy hogs and optimization opportunities you might otherwise miss.
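With outlet-level data, finding the biggest consumers is a simple aggregation. This sketch assumes per-outlet power samples have already been exported from the PDU into a Python dict; the outlet IDs and wattages are made up, and real PDUs expose readings through their own vendor-specific interfaces.

```python
from statistics import mean

# Hypothetical per-outlet power samples (watts) exported from a metered PDU.
readings = {
    "A1": [410, 395, 420],
    "A2": [88, 90, 87],
    "A3": [760, 742, 771],
    "A4": [300, 310, 295],
}


def rank_outlets(samples: dict[str, list[float]]) -> list[tuple[str, float, float]]:
    """Return (outlet, average watts, share of rack power), largest consumer first."""
    averages = {outlet: mean(values) for outlet, values in samples.items()}
    total = sum(averages.values())
    ranked = sorted(averages.items(), key=lambda item: item[1], reverse=True)
    return [(outlet, avg, avg / total) for outlet, avg in ranked]


for outlet, avg, share in rank_outlets(readings):
    print(f"{outlet}: {avg:6.1f} W ({share:.0%} of rack)")
```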

3. Virtualize and Consolidate Your Server Infrastructure

With cooling and power distribution optimized, turn your attention to the IT equipment itself. Virtualization is a powerful efficiency strategy. By consolidating workloads onto fewer physical servers, you can dramatically improve utilization rates while maintaining performance.

Traditional data centers often run servers at just 10-15% utilization. Through virtualization and consolidation, major organizations like Citigroup have improved utilization to around 50%, delivering immediate energy savings while reducing facility requirements.
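A back-of-the-envelope consolidation estimate needs two steps: how many hosts carry the same aggregate load at a higher utilization, and what those hosts draw. The sketch below assumes a simple linear server power model (idle draw plus a utilization-proportional share of the dynamic range) with hypothetical 100 W idle and 300 W peak figures; actual power curves vary by platform.

```python
import math


def consolidated_host_count(hosts: int, current_util: float, target_util: float) -> int:
    """Hosts needed to carry the same aggregate load at a higher average utilization."""
    if not 0 < current_util <= target_util <= 1:
        raise ValueError("expected 0 < current_util <= target_util <= 1")
    return math.ceil(hosts * current_util / target_util)


def fleet_power_w(hosts: int, util: float, idle_w: float, peak_w: float) -> float:
    """Linear model: each host draws idle power plus util * (peak - idle)."""
    return hosts * (idle_w + (peak_w - idle_w) * util)


before = 1_000
after = consolidated_host_count(before, current_util=0.10, target_util=0.50)
before_kw = fleet_power_w(before, 0.10, idle_w=100, peak_w=300) / 1000
after_kw = fleet_power_w(after, 0.50, idle_w=100, peak_w=300) / 1000
print(f"{before} hosts at 10% -> {after} hosts at 50%: {before_kw:.0f} kW -> {after_kw:.0f} kW")
```

Because idle hosts still draw a large share of peak power in this model, most of the saving comes from switching hosts off: 120 kW falls to 40 kW for the same aggregate load.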

Containerization takes this further by providing lightweight virtualization. Containers use fewer resources than traditional VMs, enabling higher densities. Orchestrators like Kubernetes can automate placement and, with the right scheduler settings, pack workloads onto fewer nodes.
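Dense placement is essentially a bin-packing problem. The sketch below is not Kubernetes's scheduler (kube-scheduler favors less-allocated nodes by default and can be configured with a MostAllocated scoring strategy to favor packing); it is the classic first-fit decreasing heuristic, applied to hypothetical CPU-core requests on assumed 16-core hosts.

```python
def first_fit_decreasing(demands: dict[str, float], capacity: float) -> list[dict[str, float]]:
    """Pack workloads onto as few hosts as possible.

    Sort workloads largest first, place each on the first host with room,
    and open a new host only when none fits.
    """
    hosts: list[dict[str, float]] = []
    for name, demand in sorted(demands.items(), key=lambda item: item[1], reverse=True):
        if demand > capacity:
            raise ValueError(f"{name} needs {demand}, more than one host provides")
        for host in hosts:
            if sum(host.values()) + demand <= capacity:
                host[name] = demand
                break
        else:
            hosts.append({name: demand})
    return hosts


# Hypothetical CPU-core requests for containerized services.
workloads = {"api": 6, "web": 4, "batch": 10, "cache": 3, "queue": 2, "metrics": 5}
placement = first_fit_decreasing(workloads, capacity=16)
for i, host in enumerate(placement, start=1):
    print(f"host {i}: {sorted(host)} ({sum(host.values())}/16 cores)")
```

Sorting first matters: placing large workloads early leaves small ones to fill the gaps, which is why the decreasing variant usually needs fewer hosts than plain first fit.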

Workload scheduling adds another layer of optimization. By intelligently distributing tasks across your infrastructure based on efficiency metrics, you can concentrate work on your most efficient systems and potentially power down underutilized resources during off-peak periods.

4. Implement Strategic Airflow Management

Once you’ve virtualized and consolidated, focus on airflow management. This is often the most cost-effective efficiency strategy, ensuring cooling resources go exactly where needed.

Containment systems physically separate hot and cold air streams, preventing the mixing that reduces cooling effectiveness. Whether you choose hot-aisle or cold-aisle containment, recent studies show impressive results: a November 2024 report from Encor Advisors found that hot-aisle containment can improve cooling efficiency by over 30% and reduce cooling costs by 20-40%. These energy savings quickly offset the initial implementation costs.

Don’t overlook the simple stuff. Blanking panels in empty rack spaces, cable opening seals, and managing under-floor obstructions make a surprising difference in cooling effectiveness.

Temperature and airflow monitoring tools provide the data you need to optimize. Deploy sensors throughout your facility to identify hotspots, evaluate airflow patterns, and fine-tune your cooling parameters.

Remember, airflow management is an ongoing process, not a one-time fix. Regular maintenance checks to ensure containment systems remain intact and adjustments to accommodate changing IT loads are essential for sustained efficiency.

5. Conduct Regular Energy Audits and Monitor Performance

With the major systems optimized, establish a rigorous monitoring regime. Regular energy audits serve as essential check-ups for your data center and can deliver significant returns. According to Sunbird DCIM, simply “raising temperatures can potentially save 4%-5% in energy costs for every 1°F increase in server inlet temperature.”

Don’t stop at periodic audits. Continuous monitoring provides real-time insights into PUE, cooling efficiency, and server utilization. This visibility helps you catch and fix efficiency problems quickly.

Data center infrastructure management (DCIM) solutions give you a command center for efficiency. These platforms typically deliver 10-15% efficiency improvements by coordinating IT and facility systems.

Advanced tools like thermal imaging and computational fluid dynamics (CFD) modeling provide deeper insights into cooling effectiveness. They reveal thermal issues invisible to conventional monitoring.

Consider automated anomaly detection systems that alert you to efficiency deviations before they become major issues. Machine learning algorithms can establish baseline performance patterns and flag unusual changes that might indicate developing problems.
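As a simpler stand-in for a machine learning model that still shows the baseline-and-deviation idea, the sketch below flags readings more than three standard deviations from a rolling baseline (a rolling z-score). The hourly PUE series is synthetic, with one spike injected at hour 40; the 24-hour window and threshold of 3 are assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(series: list[float], window: int = 24, threshold: float = 3.0) -> list[int]:
    """Return indices whose value sits more than `threshold` standard deviations
    away from the mean of the preceding `window` readings."""
    flagged = []
    for i in range(window, len(series)):
        baseline = series[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        # A perfectly flat baseline has no spread to compare against; skip it.
        if sigma > 0 and abs(series[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Synthetic hourly PUE: a small repeating pattern with one injected spike.
hourly_pue = [1.40 + 0.01 * (hour % 3) for hour in range(48)]
hourly_pue[40] = 1.62
print(flag_anomalies(hourly_pue))
```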

6. Integrate Renewable Energy Sources

Monitoring will reveal optimization opportunities, but you can go further by changing your energy sources. The future of data center development increasingly depends on selecting energy campus partners who can deliver comprehensive renewable energy solutions from the ground up.

Forward-thinking hyperscalers and data center developers are moving beyond traditional grid-dependent facilities toward energy campuses that integrate renewable generation directly into their infrastructure planning. On-site generation through solar or wind directly reduces grid electricity consumption and carbon emissions while providing the energy security and scalability that modern AI and high-performance computing workloads require.

Power Purchase Agreements (PPAs) are another popular approach. These long-term contracts support renewable development while securing predictable energy costs, even when on-site generation isn’t practical. However, the most strategic operators are partnering with energy campus developers who can deliver turnkey renewable solutions that eliminate the complexity of managing multiple vendors and ensure optimal integration from day one.

Energy storage systems help address the intermittency challenge. Battery installations allow you to store excess renewable energy for use during peak demand or when renewable sources aren’t producing. Advanced energy campus developments integrate storage planning into the initial site design, maximizing efficiency and reducing long-term operational complexity.
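A minimal dispatch rule shows how storage shifts renewable surplus into deficit hours. This Python sketch uses hourly steps, charges from surplus, discharges to cover shortfalls, and applies an assumed round-trip efficiency on the charging side. Capacity, power rating, and the load and generation profiles are hypothetical; real dispatch also considers tariffs, backup reserve requirements, and battery degradation.

```python
def dispatch_battery(
    load_kw: list[float],
    renewable_kw: list[float],
    capacity_kwh: float,
    power_kw: float,
    round_trip_eff: float = 0.9,
) -> list[float]:
    """Greedy hourly dispatch. Returns grid import for each hour (kWh, 1-hour steps)."""
    stored_kwh = 0.0
    grid_kwh = []
    for load, generation in zip(load_kw, renewable_kw):
        surplus = generation - load
        if surplus > 0:
            # Charge from surplus, limited by power rating and free capacity.
            # Round-trip losses are applied as energy goes in; any surplus
            # left over is assumed to be curtailed or exported.
            headroom = (capacity_kwh - stored_kwh) / round_trip_eff
            charge = min(surplus, power_kw, headroom)
            stored_kwh += charge * round_trip_eff
            grid_kwh.append(0.0)
        else:
            # Cover the shortfall from storage first, then from the grid.
            deficit = -surplus
            discharge = min(deficit, power_kw, stored_kwh)
            stored_kwh -= discharge
            grid_kwh.append(deficit - discharge)
    return grid_kwh


# Hypothetical 6-hour profile: midday solar surplus, evening shortfall.
load = [500, 500, 500, 500, 500, 500]
solar = [900, 900, 700, 200, 0, 0]
with_battery = dispatch_battery(load, solar, capacity_kwh=800, power_kw=400)
without_battery = dispatch_battery(load, solar, capacity_kwh=0, power_kw=400)
print(f"grid import: {sum(without_battery):.0f} kWh without storage, "
      f"{sum(with_battery):.0f} kWh with storage")
```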

Microgrids represent the next evolution, combining on-site generation, storage, and intelligent control systems. These self-contained energy networks can operate independently when needed, enhancing reliability while maximizing renewable utilization. The most successful implementations require deep expertise in both renewable energy development and data center infrastructure—capabilities that specialized energy campus developers bring together under one roof.

7. Select and Manage Efficient IT Equipment

With energy sources optimized, focus on the equipment consuming that energy. Hardware selection drives overall efficiency. The most efficient servers can deliver the same computational output while consuming 30-40% less power than less efficient alternatives.

Don’t ignore power management features built into modern equipment. Dynamic voltage scaling, low-power states, and intelligent workload management typically deliver 10-15% energy savings with minimal performance impact.

Smart hardware refresh strategies balance energy efficiency with financial considerations. As equipment ages, it becomes less efficient relative to newer technology. Disciplined refresh cycles ensure your data center runs with optimally efficient hardware.

When evaluating new equipment, look beyond the purchase price to total cost of ownership (TCO). Higher upfront costs for more efficient equipment often pay for themselves through reduced energy consumption over the equipment’s lifetime.
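A simple TCO comparison makes the trade-off explicit. The sketch below adds lifetime energy cost to purchase price and scales IT energy by PUE so each watt carries its cooling and distribution overhead. Both servers, the $0.10/kWh price, the 1.5 PUE, and the five-year life are hypothetical; the efficient model draws 35% less power, within the 30-40% range cited earlier. Maintenance, space, and licensing are ignored.

```python
HOURS_PER_YEAR = 8760


def lifetime_energy_kwh(avg_power_w: float, years: float, pue: float = 1.0) -> float:
    """Facility energy attributable to one device: IT energy scaled by PUE."""
    return avg_power_w / 1000 * HOURS_PER_YEAR * years * pue


def tco(purchase_price: float, avg_power_w: float, years: float,
        price_per_kwh: float, pue: float = 1.0) -> float:
    """Purchase price plus lifetime energy cost (ignores maintenance, space, licensing)."""
    return purchase_price + lifetime_energy_kwh(avg_power_w, years, pue) * price_per_kwh


# Hypothetical servers with equal capacity.
standard = tco(8_000, avg_power_w=400, years=5, price_per_kwh=0.10, pue=1.5)
efficient = tco(8_600, avg_power_w=260, years=5, price_per_kwh=0.10, pue=1.5)
print(f"standard: ${standard:,.0f}  efficient: ${efficient:,.0f}")
```

Under these assumptions the $600 premium is recovered and the efficient model ends about $320 cheaper over five years.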

8. Implement Data-Driven Temperature Management

With efficient equipment in place, optimize your environmental settings. Temperature setpoints offer a major efficiency opportunity. Traditional data centers ran unnecessarily cool (around 65°F/18°C). Modern equipment can safely operate much warmer, with ASHRAE guidelines now recommending inlet temperatures up to 80.6°F (27°C).

Each degree increase in temperature typically reduces cooling energy by 2-4%. Leading operators have methodically raised their setpoints while carefully monitoring equipment performance.
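The sketch below projects annual cooling energy after a setpoint increase. It treats the per-degree reduction as compounding (an assumption), uses 3% per °C (a value inside the 2-4% range above, assumed to be per °C), and rejects setpoints above the ASHRAE recommended 27 °C inlet limit. The 4,000 MWh baseline is hypothetical.

```python
ASHRAE_RECOMMENDED_MAX_INLET_C = 27.0  # upper end of the ASHRAE recommended inlet range


def cooling_energy_after_raise(
    baseline_kwh: float,
    current_inlet_c: float,
    new_inlet_c: float,
    reduction_per_degree_c: float,
) -> float:
    """Project cooling energy after raising the inlet setpoint.

    Assumes each 1 C step cuts the remaining cooling energy by the same fraction.
    """
    if new_inlet_c > ASHRAE_RECOMMENDED_MAX_INLET_C:
        raise ValueError("setpoint exceeds the ASHRAE recommended inlet maximum")
    if new_inlet_c < current_inlet_c:
        raise ValueError("this sketch only models setpoint increases")
    degrees = new_inlet_c - current_inlet_c
    return baseline_kwh * (1 - reduction_per_degree_c) ** degrees


baseline = 4_000_000  # hypothetical annual cooling energy, kWh
for target in (20.0, 22.0, 24.0, 27.0):
    projected = cooling_energy_after_raise(baseline, 18.0, target, 0.03)
    print(f"{target:.0f} C inlet: {projected / 1000:,.0f} MWh ({1 - projected / baseline:.1%} saved)")
```

Raising setpoints should still be done in steps while watching equipment inlet temperatures and fan speeds, as the operators described above do.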

Dynamic temperature management systems take this further by adjusting cooling based on real-time conditions. The most advanced implementations use machine learning to predict cooling needs and proactively adjust for maximum efficiency.

Humidity control presents another optimization opportunity. Older data centers maintained tight humidity ranges (40-55% relative humidity), requiring energy-intensive humidification and dehumidification. Modern guidelines allow much wider ranges (20-80%), dramatically reducing the energy needed for humidity control.

9. Optimize Building Design and Infrastructure

With systems and settings optimized, consider your physical infrastructure. Location matters more than you might think. Selecting sites with favorable climates enables greater use of free cooling, reducing mechanical cooling requirements during portions of the year.

Building envelope design affects thermal performance. High-performance insulation, reflective roofing, and strategic orientation minimize heat transfer between your facility and the environment.

Modular and scalable design prevents the inefficiencies of underutilized infrastructure. Rather than building full capacity initially, implement phased deployments that match actual requirements while maintaining the ability to grow.

Consider heat reuse opportunities that capture and repurpose waste heat from your IT equipment. District heating systems, preheating water, or even greenhouse operations can transform what would be wasted energy into a valuable resource. This approach doesn’t reduce your PUE (it might actually increase it), but it significantly improves overall energy utilization.
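The Green Grid's Energy Reuse Effectiveness (ERE) metric captures what PUE misses: it subtracts reused energy from facility energy before dividing by IT energy. The numbers in this sketch are hypothetical: heat-recovery equipment adds 50 MWh of facility load, raising PUE, while 400 MWh of heat is exported to a district heating loop.

```python
def pue(total_kwh: float, it_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT energy."""
    return total_kwh / it_kwh


def ere(total_kwh: float, it_kwh: float, reused_kwh: float) -> float:
    """Energy Reuse Effectiveness: (total facility energy - reused energy) / IT energy."""
    if not 0 <= reused_kwh <= total_kwh:
        raise ValueError("reused energy must be between 0 and total facility energy")
    return (total_kwh - reused_kwh) / it_kwh


it_kwh = 1_000_000          # hypothetical annual IT energy
without_reuse = 1_300_000   # facility energy before heat recovery
with_reuse = 1_350_000      # heat-recovery equipment adds 50 MWh of facility load
exported_heat = 400_000     # heat delivered to a district heating loop

print(f"PUE without reuse: {pue(without_reuse, it_kwh):.2f}")
print(f"PUE with reuse:    {pue(with_reuse, it_kwh):.2f}")
print(f"ERE with reuse:    {ere(with_reuse, it_kwh, exported_heat):.2f}")
```

PUE rises from 1.30 to 1.35, while ERE falls to 0.95, which matches the point that heat reuse can raise PUE yet improve overall energy utilization.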

10. Develop a Continuous Improvement Strategy

Finally, create a framework for ongoing optimization. Start by establishing baseline performance metrics through comprehensive auditing. This foundation enables informed decision-making and provides measurable benchmarks.

Prioritize your efforts based on potential impact and implementation feasibility. Airflow management and virtualization typically offer substantial benefits with modest investments, making them excellent starting points.

Don’t set it and forget it. Regular reassessment ensures continued optimization as technologies and requirements evolve. The most successful efficiency programs establish continuous improvement cycles.

Foster a culture of efficiency throughout your organization. From C-suite support to frontline implementation, energy efficiency must become part of your operational DNA. Regular training, shared metrics, and recognition for improvement ideas all help embed efficiency into your organizational culture.

Stay current with industry developments. Attend conferences, participate in benchmarking studies, and engage with organizations like The Green Grid to learn about emerging technologies and best practices. What was cutting-edge yesterday may be standard practice tomorrow.

11. Choose the Right Energy Campus Development Partner

As data center power demands continue to surge, selecting the right energy campus development partner has become as critical as any infrastructure decision you’ll make. The most efficient data centers start with strategic site selection and comprehensive energy planning that traditional real estate developers simply cannot provide.

Energy campus developers specialize in identifying optimal locations based on renewable energy potential, transmission access, and utility partnerships—not just proximity to metropolitan areas. They handle the complex process of land acquisition, zoning approvals, utility coordination, and renewable energy integration that can take years to navigate independently.

Key capabilities to evaluate in energy campus partners include proven experience with gigawatt-scale renewable energy development, established relationships with utility providers, and comprehensive site preparation services that extend beyond basic real estate development. The best partners offer end-to-end solutions that include fiber connectivity, water resources, natural gas access where applicable, and integrated renewable energy systems.

Look for developers with strong financial backing and a track record of successful project completion. Energy campus development requires substantial upfront investment and long-term commitment—partnerships with undercapitalized developers can result in project delays, cost overruns, or incomplete infrastructure that compromises your facility’s efficiency goals.

The most strategic energy campus partnerships also provide ongoing operational support and expansion capabilities. As your computing demands grow, your energy campus partner should be positioned to scale renewable generation and infrastructure capacity seamlessly, enabling rapid deployment of additional facilities without starting the site development process from scratch.

Taking the Next Step in Data Center Energy Efficiency

Implementing these data center energy efficiency best practices requires a systematic approach and expertise. The payoff is substantial: reduced operational costs, minimized environmental impact, and improved performance.

However, the most impactful efficiency gains often start before the first server is installed. Forward-thinking organizations are recognizing that energy efficiency begins with strategic site selection and comprehensive energy campus development that integrates renewable generation, optimized infrastructure, and scalable power delivery from day one.

Think of efficiency as a journey rather than a destination. Technology continues to evolve, offering new opportunities for optimization. But the organizations achieving the most dramatic efficiency improvements are those partnering with specialized energy campus developers who can deliver turnkey renewable energy solutions and purpose-built infrastructure designed for optimal performance.

For organizations seeking expert guidance in developing energy-efficient data center campuses from the ground up, 174 Power Global offers specialized solutions for comprehensive energy campus development that integrates renewable energy generation, strategic site selection, and scalable power infrastructure. With extensive experience in gigawatt-scale renewable energy projects and deep expertise in data center power requirements, our team helps hyperscalers and data center developers achieve ambitious efficiency goals while reducing operational costs and environmental impact through purpose-built energy campuses designed for long-term scalability. Contact us to learn how a customized energy campus approach can deliver superior efficiency and performance for your next data center development.