The rise of generative AI and machine learning at scale is forcing data center architects to reimagine everything from the ground up. Traditional designs built around CPU-based workloads can’t support the high-density, high-throughput demands of AI infrastructure. As a result, AI data center design is becoming a specialized discipline that must solve for unprecedented thermal loads, electrical consumption, and sustainability challenges in tandem.

Power demand from data centers could reach as much as 219 gigawatts (GW) by 2030, up from the current demand of 60 GW. These figures call for innovative approaches to data center design, emphasizing the integration of renewable energy sources and advanced cooling solutions to meet the escalating demands of AI workloads.
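To put that jump in perspective, the short Python sketch below works out the compound annual growth rate implied by going from 60 GW to 219 GW. The five-year window is an assumption (it treats "current" as 2025), and the function name is purely illustrative.

```python
def implied_annual_growth(start_gw: float, end_gw: float, years: int) -> float:
    """Compound annual growth rate needed to move from start_gw to end_gw."""
    if start_gw <= 0 or end_gw <= 0 or years <= 0:
        raise ValueError("start_gw, end_gw, and years must all be positive")
    return (end_gw / start_gw) ** (1 / years) - 1


# Figures from the article: 60 GW today, up to 219 GW by 2030.
# ASSUMPTION: "today" is treated as 2025, which gives a five-year window.
rate = implied_annual_growth(60.0, 219.0, years=5)
print(f"Implied growth: {rate:.1%} per year")
```

A different baseline year changes the result, so the output is best read as an order-of-magnitude illustration rather than a forecast.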

Why AI Data Center Design Is Unlike Anything Before

As AI applications like natural language processing, computer vision, and generative models scale in complexity, they demand far more from physical infrastructure than legacy IT systems ever did. Unlike traditional workloads, which are relatively lightweight and intermittent, AI training and inference require continuous, high-throughput processing with immense power and cooling needs. This energy consumption has created a watershed moment in AI data center design where every decision, from rack configuration to energy sourcing, must be reengineered to meet new realities.

From CPU to GPU: What This Shift Means for Physical Infrastructure

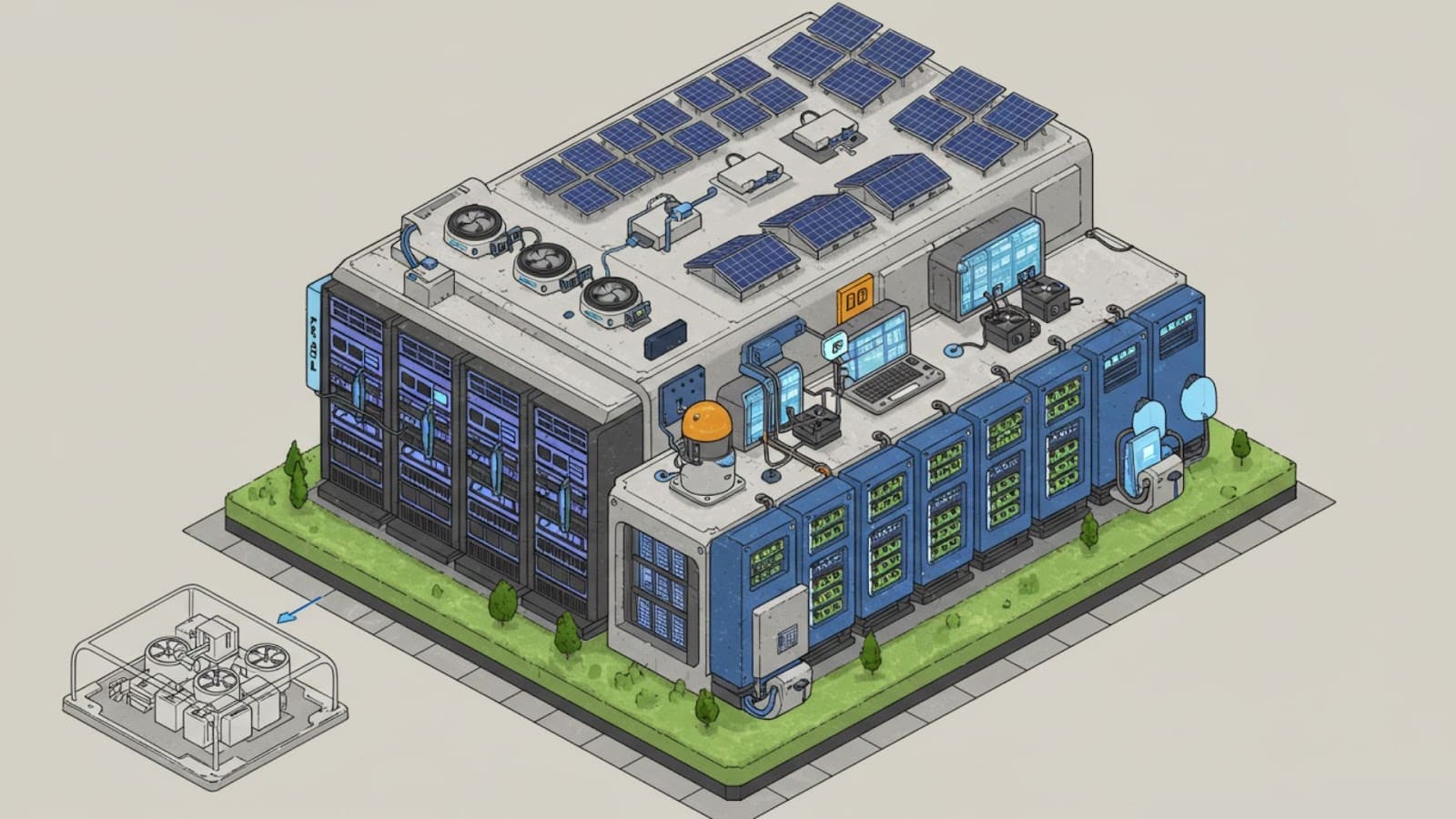

The migration from CPU-based compute to GPU-intensive workloads is perhaps the most fundamental shift driving change in data center design. GPUs, particularly those built for parallel processing in AI training, generate significantly more heat and consume more power per square foot than traditional server configurations. As a result, the physical layout of data centers is being transformed to accommodate denser, vertically stacked servers and high-speed interconnects that can efficiently move massive data volumes between processors.

This change impacts structural load, cabling complexity, and overall floor planning. Facilities now require reinforced floors, elevated ceilings, and greater spacing allowances to facilitate specialized cooling systems. In some cases, operators are moving toward custom-built pods or modular units designed exclusively for AI workloads.

Why Energy Density and Thermal Loads Are Now the Top Challenges

AI servers can demand up to 10x more power than conventional servers, pushing rack densities from 5–10 kW to 40 kW or more. This demand intensifies the thermal footprint of each rack and makes managing heat a mission-critical engineering challenge. Failure to control thermal loads can lead to performance throttling, equipment damage, and downtime, which are all unacceptable in high-availability environments.
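The effect of that density shift can be sketched with simple arithmetic. The example below assumes a hypothetical 2 MW data hall and treats essentially all electrical power drawn by a rack as heat that must be removed; the hall size and function names are illustrative, not taken from any specific facility.

```python
import math

# Standard conversion: 1 kW of electrical load is roughly 3,412 BTU/hr of heat.
BTU_PER_HR_PER_KW = 3412.14


def racks_needed(it_load_kw: float, rack_density_kw: float) -> int:
    """Number of racks required to host a given IT load at a given density."""
    return math.ceil(it_load_kw / rack_density_kw)


def rack_heat_btu_per_hr(rack_density_kw: float) -> float:
    """Heat each rack rejects, assuming all electrical power becomes heat."""
    return rack_density_kw * BTU_PER_HR_PER_KW


HALL_IT_LOAD_KW = 2000  # ASSUMPTION: hypothetical 2 MW data hall

for density_kw in (10, 40):
    racks = racks_needed(HALL_IT_LOAD_KW, density_kw)
    heat = rack_heat_btu_per_hr(density_kw)
    print(f"{density_kw} kW/rack: {racks} racks, {heat:,.0f} BTU/hr per rack")
```

The same IT load fits in a quarter of the racks at 40 kW, but each rack then has to shed four times the heat, which is why cooling becomes the limiting factor.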

Cooling infrastructure must now match the intensity of the workloads it supports. Traditional air conditioning systems are rapidly being replaced or augmented by direct-to-chip liquid cooling, immersion tanks, and hot aisle containment systems that isolate and extract heat with surgical precision. Engineers must balance the energy these systems consume against overall data center efficiency, keeping Power Usage Effectiveness (PUE), the ratio of total facility energy to IT equipment energy, as close to 1.0 as possible.
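PUE is the ratio of total facility energy to the energy consumed by IT equipment alone, so a value of 1.0 would mean zero overhead. The sketch below compares two cooling scenarios; the kWh figures are made-up illustrative values, not measurements.

```python
def pue(it_kwh: float, cooling_kwh: float, other_overhead_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    if cooling_kwh < 0 or other_overhead_kwh < 0:
        raise ValueError("overhead energy cannot be negative")
    total_kwh = it_kwh + cooling_kwh + other_overhead_kwh
    return total_kwh / it_kwh


# ASSUMPTION: hypothetical energy figures for the same IT load.
air_cooled = pue(it_kwh=1000, cooling_kwh=350, other_overhead_kwh=100)
liquid_cooled = pue(it_kwh=1000, cooling_kwh=150, other_overhead_kwh=100)

print(f"Air-cooled PUE:    {air_cooled:.2f}")
print(f"Liquid-cooled PUE: {liquid_cooled:.2f}")
```

Because IT energy sits in the denominator, every kilowatt-hour trimmed from cooling lowers PUE directly.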

The Sustainability Dilemma: Scaling AI Without Carbon Surge

AI’s growth brings not only infrastructure challenges but also ethical and environmental scrutiny. Each massive AI model trained today emits tens to hundreds of tons of CO₂ unless powered by clean energy. With AI data centers quickly becoming some of the largest energy consumers in the tech sector, sustainability can no longer be an afterthought.

These pressures are driving a new wave of demand for facilities powered by renewable sources like solar and green hydrogen, paired with advanced battery storage systems to ensure reliability. Strategic site selection has also become a sustainability issue: operators seek proximity to low-carbon grids and favorable environmental conditions to offset energy loads. Balancing performance, uptime, and carbon neutrality is fast becoming the new benchmark for next-generation data center success.

Engineering for GPU Density: Power, Space, and Cooling Considerations

The growing demand for GPU-accelerated computing has made GPU density a defining parameter in modern AI data center design. As facilities push the limits of how many GPUs can be packed into each rack, new engineering challenges around power delivery, heat dissipation, and physical access are emerging.

Higher Rack Power and Specialized Cooling Solutions

Power densities exceeding 30–40 kW per rack are becoming commonplace, and some AI-specific deployments reach beyond 50 kW. Data centers are shifting away from traditional raised-floor air cooling toward liquid cooling systems, which can extract more heat using less space and energy. Technologies such as direct-to-chip cooling, rear door heat exchangers, and full immersion cooling are now entering mainstream deployments.

In response, energy providers and data center developers are designing facilities with reinforced electrical distribution systems, high-capacity PDUs, and integrated cooling zones to handle the load. These advanced designs ensure GPU-dense racks can operate without throttling, maximizing both performance and ROI.

Balancing Compactness with Serviceability

With racks more tightly packed, maintenance access has become a critical issue. Technicians need to access hot-swappable components without disturbing neighboring systems, especially in high-density environments where a single downtime incident can disrupt multiple workloads.

AI data centers are incorporating modular rack layouts, wider aisles, and robotic monitoring tools that reduce the need for manual intervention. Some hyperscalers are also experimenting with vertically segmented zones that isolate AI workloads from other compute tasks, improving airflow and minimizing risk during upgrades or maintenance windows.

The Role of Modular Builds in Meeting AI Demands

Modular design strategies, such as prefabricated containerized units or pod-based layouts, offer a flexible approach to quickly scaling GPU density without compromising performance. These modular solutions allow for phased deployment and rapid expansion while optimizing for thermal zoning and airflow separation between hot and cold corridors.

Moreover, modular builds make it easier to align infrastructure growth with AI demand cycles, avoiding overprovisioning and reducing both capital and carbon costs.
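A phased build-out of this kind can be sketched as a simple planning calculation: add pods only when forecast demand exceeds what is already installed. The pod size and demand forecast below are hypothetical values chosen for illustration.

```python
import math
from typing import List


def pods_to_add_per_phase(demand_mw_by_phase: List[float],
                          pod_capacity_mw: float) -> List[int]:
    """Pods to deploy in each phase so installed capacity just covers demand."""
    installed_pods = 0
    plan = []
    for demand_mw in demand_mw_by_phase:
        required_pods = math.ceil(demand_mw / pod_capacity_mw)
        new_pods = max(0, required_pods - installed_pods)
        installed_pods += new_pods
        plan.append(new_pods)
    return plan


# ASSUMPTION: hypothetical demand forecast (MW) and a 5 MW pod size.
forecast_mw = [4, 9, 15, 22]
plan = pods_to_add_per_phase(forecast_mw, pod_capacity_mw=5)
print(plan)
```

Compared with building all five pods up front, this approach defers most of the capital outlay until demand for it actually arrives.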

Rethinking Airflow: Cooling Strategies That Can Keep Up

In high-performance AI environments, airflow management becomes an art form. It’s about directing, isolating, and accelerating air with maximum efficiency. As rack power consumption rises, traditional HVAC systems are giving way to dynamic, sensor-driven cooling architectures.

Hot Aisle Containment vs. Liquid Cooling Systems

Many facilities still use hot aisle containment to funnel heat away from servers and into targeted exhaust systems. This approach separates cold intake air from hot exhaust, maintaining stable internal temperatures. However, as rack power surges past 30 kW, air-based cooling starts to hit physical limits.

Liquid cooling, both direct-to-chip and immersion-based, is the go-to solution for extreme GPU configurations. It reduces reliance on airflow altogether and enables significantly tighter equipment layouts without thermal compromise. Choosing between air containment and liquid cooling often depends on the AI workload’s heat output and the site’s energy infrastructure.

Predictive AI Models for Dynamic Thermal Management

AI is now helping optimize its own operating environment. Predictive thermal models powered by machine learning can monitor GPU temperatures in real time, detect anomalies, and adjust fan speeds, valve positions, or fluid flows before hot spots form. Automation reduces energy waste and extends hardware lifespan.
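The core idea, predicting where a temperature is heading and acting before it gets there, can be shown with a deliberately simple stand-in. The sketch below uses a least-squares trend line rather than a trained machine learning model, and the target temperature, base fan speed, and gain are all hypothetical values.

```python
from statistics import mean
from typing import Sequence


def predict_temperature(samples_c: Sequence[float], steps_ahead: int) -> float:
    """Fit a straight line to evenly spaced readings and extrapolate forward."""
    n = len(samples_c)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    xs = range(n)
    x_bar, y_bar = mean(xs), mean(samples_c)
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, samples_c))
             / sum((x - x_bar) ** 2 for x in xs))
    future_x = (n - 1) + steps_ahead
    return y_bar + slope * (future_x - x_bar)


def fan_speed_percent(predicted_c: float, target_c: float = 75.0,
                      base_percent: float = 40.0,
                      gain_percent_per_c: float = 5.0) -> float:
    """Raise fan speed in proportion to the predicted overshoot (capped at 100)."""
    overshoot_c = max(0.0, predicted_c - target_c)
    return min(100.0, base_percent + gain_percent_per_c * overshoot_c)


# ASSUMPTION: hypothetical GPU temperature readings in Celsius, one per interval.
readings_c = [70.0, 71.0, 72.0, 73.0, 74.0]
forecast_c = predict_temperature(readings_c, steps_ahead=3)
print(f"Forecast: {forecast_c:.1f} C, fan: {fan_speed_percent(forecast_c):.0f}%")
```

A production system would replace the trend line with a model trained on facility telemetry, but the control loop has the same shape: forecast, compare against a limit, adjust.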

Leading-edge facilities use digital twins (virtual models of their cooling systems) to simulate how changes in GPU density or workload intensity will affect airflow. Teams can preemptively design for failure modes and optimize energy use with precision.

How Power Providers Can Enable Next-Gen Cooling

Advanced cooling strategies require coordination with the facility’s energy systems. Cooling loops, pumps, chillers, and smart fans must be backed by a reliable, scalable power supply with embedded monitoring.

Developers who co-engineer power and cooling systems, integrating them with renewable sources and battery energy storage, can help ensure uninterrupted operation and dramatically reduce the site’s PUE. Integration is especially important in AI data center design, where thermal volatility is a constant threat to uptime.

Renewable Infrastructure as a Foundation for AI Growth

Sustainable AI data center design future-proofs business models in a carbon-conscious world. The industry’s energy appetite is skyrocketing, and companies that can’t secure clean, reliable power may fall behind on both performance and ESG goals.

Why Traditional Energy Sources Can’t Keep Up

Fossil-fueled grids are under increasing pressure from regulators and market volatility. As AI pushes demand into gigawatt territory, power reliability becomes critical. Unfortunately, legacy energy infrastructure wasn’t built to handle this level of sustained draw, especially in rural or industrial zones.

Data center developers are looking for alternative energy strategies that can scale with demand. Renewable-first infrastructure, including solar, wind, and energy storage, is environmentally sound and economically strategic.

Integrating AI Data Centers with Solar, Storage, and Hydrogen

Some of the most forward-looking data center campuses are pairing AI facilities with onsite solar farms, battery storage, and green hydrogen generation. These distributed systems provide consistent, carbon-free power that can be scaled in sync with GPU deployment.

Battery Energy Storage Systems (BESS), in particular, offer load balancing capabilities that help avoid peak pricing while supporting backup needs. For facilities in remote or high-sun areas, on-campus solar can cover a significant portion of daytime energy demand, drastically cutting emissions and operating costs.
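The load-balancing behavior can be illustrated with a minimal peak-shaving simulation: the battery discharges when facility load exceeds a grid limit and recharges when there is headroom. This is a simplified sketch that ignores round-trip efficiency losses and degradation, and every number in it is hypothetical.

```python
from typing import List, Sequence


def peak_shave(load_mw: Sequence[float], grid_limit_mw: float,
               capacity_mwh: float, max_power_mw: float,
               step_hours: float = 1.0) -> List[float]:
    """Return grid draw per time step after battery dispatch (lossless model)."""
    stored_mwh = capacity_mwh  # ASSUMPTION: battery starts fully charged
    grid_draw = []
    for load in load_mw:
        if load > grid_limit_mw:
            # Discharge to cover the excess, limited by power rating and charge.
            discharge = min(load - grid_limit_mw, max_power_mw,
                            stored_mwh / step_hours)
            stored_mwh -= discharge * step_hours
            grid_draw.append(load - discharge)
        else:
            # Recharge using spare headroom below the grid limit.
            charge = min(grid_limit_mw - load, max_power_mw,
                         (capacity_mwh - stored_mwh) / step_hours)
            stored_mwh += charge * step_hours
            grid_draw.append(load + charge)
    return grid_draw


# ASSUMPTION: hypothetical hourly facility load in MW.
hourly_load_mw = [80, 90, 120, 130, 100, 70]
shaved = peak_shave(hourly_load_mw, grid_limit_mw=100,
                    capacity_mwh=60, max_power_mw=40)
print(shaved)
```

In this example the grid never sees more than the 100 MW limit even though the facility peaks at 130 MW, which is the mechanism behind avoiding peak pricing.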

The Rise of Energy Campuses: Building with Sustainability in Mind

To streamline all these systems, developers are building dedicated energy campuses. These massive land parcels are engineered for renewable energy integration, data center scale, and rapid deployment. Strategically located near grid interconnects and designed with AI workloads in mind, these sites offer a one-stop ecosystem of power, space, and sustainability.

Energy campuses offer a compelling vision of the future where AI data center design adapts to energy constraints and helps lead the transition to a cleaner, smarter grid.

Designing for the Future: Agility, Reliability, and Zero Downtime

As AI-driven services become mission-critical across industries, reliability is an imperative. Modern AI data centers must operate at hyperscale performance while delivering near-zero downtime, even in the face of climate, cyber, and supply chain risks.

Adaptive Grid Interconnection Planning

Securing a high-capacity, low-latency grid connection is one of the biggest hurdles for new data center developments. AI facilities can require hundreds of megawatts, and legacy grids are rarely equipped to handle that without years of upgrades.

Developers now work backwards from known grid constraints, engaging in multi-year permitting and engineering processes that align site planning with utility availability. Smart interconnection strategies, like looped feeds or on-site substations, help ensure long-term scalability and uptime.

Battery Energy Storage Systems for Resiliency

In AI facilities where even a few seconds of downtime can corrupt datasets or crash models, BESS provides instantaneous switchover during power loss and supports demand-side optimization during peak load windows.

Unlike diesel generators, BESS solutions are fast, clean, and increasingly affordable. They also integrate seamlessly with solar and hydrogen systems, creating a fully renewable backup solution with minimal environmental impact.

Co-Developing Power Solutions with AI Providers

Rather than treating energy as a utility service, many leading AI firms view it as a strategic partnership. Energy developers are working hand-in-hand with hyperscalers to design power solutions based on predicted GPU growth curves, rack densities, and cooling profiles.

Co-development enables faster deployment, lower costs, and deeper alignment on ESG goals. It also builds trust, which is essential when reliability and transparency define long-term success in AI data center design.

The Future of Data Center Design Starts with Smarter Energy

The transformation underway in AI data center design is now foundational. From GPU density and airflow engineering to sustainable power sourcing, every decision must support a future defined by performance, flexibility, and responsibility. As demand rises, the energy infrastructure behind these facilities will determine their uptime and viability.

174 Power Global, backed by the Hanwha Group, brings decades of experience in utility-scale renewable energy development to the data center industry. Our California-based team specializes in gigawatt-scale energy solutions, combining solar power, energy storage, and green hydrogen systems with strategic land acquisition and high-capacity grid interconnections. We handle the complete development cycle from site selection and permitting to power delivery, creating renewable-powered energy campuses designed specifically for hyperscale AI infrastructure. Contact us to power your next-generation data center with proven expertise and financial strength.