AI workloads demand unprecedented power densities and reliability, with rack requirements jumping from 8kW to 30kW+ and total data center power demand projected to grow 160% by 2030.

- Power requirements for AI data centers include GPU clusters consuming 700W-1200W per chip and continuous 24/7 operations

- AI energy scaling requires hybrid renewable systems, advanced storage, and redundant backup infrastructure

- AI data center design must integrate on-site generation, liquid cooling, and modular power distribution for maximum resilience

- Strategic recommendation: Partner with energy campus developers who can deliver integrated power solutions from land acquisition through renewable generation

Artificial intelligence is reshaping the digital economy, but behind every breakthrough lies a fundamental challenge that could determine which organizations succeed in the AI revolution. The power requirements for AI data centers have exploded beyond anything the industry has previously encountered, creating an urgent need for entirely new approaches to energy infrastructure design and delivery.

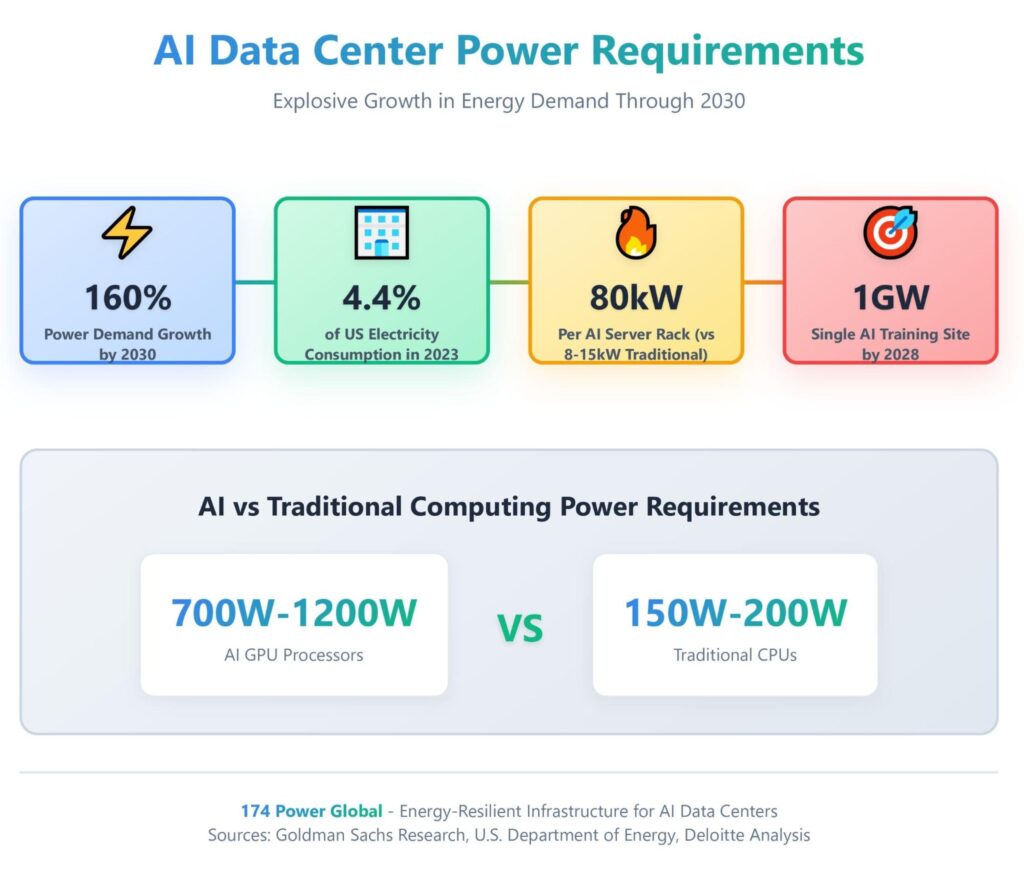

According to Goldman Sachs Research, data center power demand will grow 160% by 2030, with AI workloads driving the majority of this surge. The U.S. Department of Energy reports that data center load growth has tripled over the past decade and is projected to double or triple again by 2028. Unlike traditional computing, AI operations draw high power continuously, with modern GPU clusters demanding 30kW per rack compared to just 8kW for conventional servers. This isn’t simply a scaling challenge; it represents a fundamental shift requiring energy-resilient infrastructure designed from the ground up for AI’s unique demands.

The consequences of inadequate power planning are severe. Data centers experiencing power failures can face losses exceeding $1 million per hour, while insufficient capacity blocks AI deployment entirely. Organizations that fail to secure reliable, scalable power infrastructure will find themselves unable to capitalize on AI opportunities, regardless of their computational expertise or data resources.

Understanding Power Requirements for AI Data Centers

AI workloads consume dramatically more energy than traditional computing tasks, creating power demands that strain existing infrastructure and require comprehensive redesign of data center energy systems. The shift from CPU-based computing to GPU-intensive AI processing has fundamentally altered the energy landscape for digital infrastructure.

Modern AI chips consume between 700W and 1200W per processor, compared to 150W-200W for traditional server CPUs. In a typical configuration of eight GPUs per server blade and ten blades per rack, a rack holds 80 GPUs, so at roughly 1,000W per GPU a single AI rack can demand about 80kW of sustained power, and more at the top of the per-chip range. Training large language models or running inference workloads generates these power requirements continuously, unlike traditional computing, which experiences significant load variations throughout the day.
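The per-rack figure can be checked with simple multiplication. The following is a minimal sketch, assuming the configuration described above (eight GPUs per server, ten servers per rack) and counting GPU draw only. The function name `rack_power_kw` is illustrative, and CPUs, networking, and fans would add to these totals.

```python
def rack_power_kw(gpu_watts: float,
                  gpus_per_server: int = 8,
                  servers_per_rack: int = 10) -> float:
    """GPU power draw for one rack in kW (GPUs only, no CPUs, fans, or networking)."""
    return gpu_watts * gpus_per_server * servers_per_rack / 1000.0


# Per-chip range quoted in the text: 700 W to 1200 W.
low_kw = rack_power_kw(700)
high_kw = rack_power_kw(1200)
print(f"{low_kw:.0f} kW to {high_kw:.0f} kW per rack")  # 56 kW to 96 kW per rack
```

At 1,000W per GPU the same calculation gives 80kW, which matches the rack figure quoted above.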

The sustained nature of AI power consumption creates unique challenges for energy planning. MIT research shows that processing a million tokens generates carbon emissions equivalent to driving a gas-powered vehicle 5-20 miles, while creating an AI-generated image uses energy equivalent to fully charging a smartphone. According to the International Energy Agency, global data centers currently account for around 1% of electricity consumption, but this could increase significantly as AI adoption accelerates.

AI energy scaling becomes more complex when considering model training requirements. Training sessions often run for weeks or months, requiring thousands of synchronized GPUs operating at near-maximum capacity. This creates power demands that must be sustained over extended periods without interruption, as training failures can result in significant time and resource losses.

According to RAND Corporation analysis, a single AI training run could demand 1 GW at one location by 2028 and as much as 8 GW by 2030. The 2030 figure is equivalent to the output of eight nuclear reactors, highlighting the scale of infrastructure required to support next-generation AI capabilities.

The unpredictable nature of AI workloads adds another layer of complexity. Unlike traditional enterprise computing with predictable daily and seasonal patterns, AI operations can spike unexpectedly as models encounter complex problems or as new training datasets become available. This variability requires power infrastructure designed for maximum sustained load rather than average consumption patterns.

Core Components of Energy-Resilient Design

Energy-resilient AI data center design requires multiple integrated systems working together to ensure continuous power availability under all operating conditions. These components must function seamlessly during normal operations while providing robust backup capabilities during grid disturbances or equipment failures.

Primary power infrastructure forms the foundation of resilient design. This includes high-capacity transformers rated for sustained maximum load, redundant utility connections from separate substations when possible, and power distribution systems designed for the high current demands of AI workloads. AI facilities typically operate at much higher capacity utilization rates than traditional data centers, requiring infrastructure designed for sustained peak operation.

Redundancy systems provide critical backup capabilities. N+1 configurations ensure that if any single power component fails, backup systems immediately assume the load without interrupting operations. For mission-critical AI applications, some operators implement 2N redundancy, where complete duplicate power systems run in parallel. This approach doubles infrastructure costs but eliminates single points of failure.
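The cost difference between the two schemes is easiest to see as a unit count. This is a minimal sketch, assuming identical power modules (UPS units or generators) of a fixed rating. The function name `units_required` and the 2,000kW and 500kW figures are illustrative, not taken from any specific facility.

```python
import math


def units_required(load_kw: float, unit_kw: float, scheme: str) -> int:
    """Number of identical power modules needed under a redundancy scheme."""
    n = math.ceil(load_kw / unit_kw)  # N: modules needed just to carry the load
    if scheme == "N":
        return n
    if scheme == "N+1":
        return n + 1      # one spare module covers any single failure
    if scheme == "2N":
        return 2 * n      # a complete duplicate system
    raise ValueError(f"unknown scheme: {scheme}")


# Hypothetical example: 2,000 kW critical load, 500 kW modules.
for scheme in ("N", "N+1", "2N"):
    print(scheme, units_required(2000, 500, scheme))
```

For this example load, N+1 adds one module to the four required, while 2N adds four, which is where the doubling of infrastructure cost comes from.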

Power quality management becomes essential when supporting sensitive AI hardware. Voltage fluctuations, frequency variations, or harmonic distortion can cause GPU failures or training interruptions that result in significant time and cost losses. Advanced power conditioning systems, uninterruptible power supplies (UPS), and surge protection equipment maintain clean, stable power delivery regardless of grid conditions.

Monitoring and control systems provide real-time visibility into power consumption, system health, and performance metrics. Modern AI data centers deploy intelligent power management software that can automatically shed non-critical loads during emergencies, optimize power distribution based on workload requirements, and predict potential failures before they occur.

Advanced Power Infrastructure Solutions

Modern AI data center design leverages innovative power infrastructure technologies that go beyond traditional approaches to meet the unique demands of high-performance computing workloads. These solutions address both the scale and reliability requirements of AI operations while supporting sustainability objectives.

Modular power distribution systems enable rapid deployment and flexible reconfiguration as AI workloads evolve. Prefabricated switchgear, transformer modules, and distribution units can be deployed quickly and reconfigured as power requirements change. This modularity proves particularly valuable for AI environments where computing configurations may change significantly as new hardware becomes available.

High-voltage power distribution reduces transmission losses and improves efficiency for large-scale AI facilities. Advanced power delivery systems, including higher-voltage server power supplies, help minimize energy losses throughout the distribution chain. Medium-voltage distribution loops create redundant power paths while minimizing the electrical infrastructure footprint.
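The efficiency gain from higher voltage follows from resistive loss: for a fixed power, current falls in proportion to voltage, and loss falls with the square of the current. The sketch below uses a deliberately simplified model (a single conductor with fixed resistance and unity power factor). The function name and the example values are assumptions chosen for illustration.

```python
def line_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Resistive loss (I^2 * R) when delivering power_w at voltage_v."""
    current_a = power_w / voltage_v        # I = P / V
    return current_a ** 2 * resistance_ohm  # P_loss = I^2 * R


# Hypothetical example: 100 kW through a 0.01 ohm conductor.
low_voltage_loss = line_loss_w(100_000, 400, 0.01)
high_voltage_loss = line_loss_w(100_000, 4_000, 0.01)
print(low_voltage_loss, high_voltage_loss)
```

In this model, raising the voltage tenfold cuts the conductor loss by a factor of one hundred. Real three-phase systems add transformer and power-factor effects that this sketch ignores.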

Dynamic load management systems automatically adjust power allocation based on real-time AI workload requirements. These systems can shift power between different computing clusters, reduce non-essential loads during peak demands, and coordinate with grid operators to provide demand response capabilities. Advanced implementations can even migrate workloads between facilities to optimize power utilization across multiple sites.
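The load-shedding behavior described above can be sketched as a priority-ordered allocation. This is an illustration under stated assumptions, not a description of any vendor's power management product. The `Load` class, the priority convention, and the example loads are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Load:
    name: str
    kw: float
    priority: int  # lower number = more critical


def shed_loads(loads: list[Load], available_kw: float) -> tuple[list[str], list[str]]:
    """Keep loads in priority order while they fit within available_kw; shed the rest."""
    kept, shed = [], []
    remaining = available_kw
    for load in sorted(loads, key=lambda item: item.priority):
        if load.kw <= remaining:
            kept.append(load.name)
            remaining -= load.kw
        else:
            shed.append(load.name)
    return kept, shed


# Hypothetical cluster loads during a supply shortfall of 1,150 kW.
loads = [
    Load("training-cluster", 800, 1),
    Load("inference-cluster", 300, 2),
    Load("dev-sandbox", 200, 3),
    Load("batch-analytics", 100, 4),
]
kept, shed = shed_loads(loads, available_kw=1150)
print("kept:", kept)
print("shed:", shed)
```

A production system would also consider ramp rates, minimum run times, and grid signals. The greedy pass here only shows the ordering logic.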

Microgrid capabilities allow AI data centers to operate independently from the main electrical grid during emergencies or maintenance periods. Microgrids combine on-site generation, energy storage, and intelligent control systems to create self-sufficient power networks. During normal operations, microgrids can provide grid services such as frequency regulation and voltage support while reducing dependence on utility power.

Direct current (DC) power distribution eliminates multiple AC-DC conversions, improving overall system efficiency. DC systems work particularly well with renewable energy sources and battery storage systems, which naturally operate in DC mode. Some advanced AI facilities implement DC microgrids that connect solar arrays, batteries, and computing equipment directly without conversion losses.

Meeting Power Requirements for AI Data Centers Through Renewable Integration

Renewable energy integration has evolved from an environmental consideration to a business necessity for AI data center operations. The scale of power requirements for AI data centers makes renewable energy both financially attractive and operationally essential for long-term sustainability and cost control.

On-site solar generation provides the most direct approach to renewable energy integration. Large-scale solar arrays can be co-located with AI data centers, providing clean power during daylight hours while reducing grid dependence. Advanced implementations combine solar generation with battery storage systems to extend renewable power availability beyond daylight hours.

Battery energy storage systems (BESS) bridge the gap between intermittent renewable generation and continuous AI power demands. Modern lithium-ion systems can store excess solar energy during peak generation periods and discharge during evening hours or cloudy conditions. BESS also provides grid services such as frequency regulation and voltage support, creating additional revenue streams while supporting renewable integration.
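A first-pass estimate of how much storage is needed to carry a constant load through non-generating hours can be sketched as follows. The usable depth of discharge and discharge efficiency defaults are placeholder assumptions, not vendor specifications, and the function name is illustrative.

```python
def bess_capacity_mwh(load_mw: float,
                      hours: float,
                      depth_of_discharge: float = 0.9,
                      discharge_efficiency: float = 0.95) -> float:
    """Nameplate storage (MWh) needed to serve load_mw for the given hours.

    Divides the delivered energy by the usable fraction of the battery
    and by the discharge efficiency (both assumed values).
    """
    delivered_mwh = load_mw * hours
    return delivered_mwh / (depth_of_discharge * discharge_efficiency)


# Hypothetical example: 10 MW of AI load carried for 12 hours overnight.
print(round(bess_capacity_mwh(10, 12), 1), "MWh nameplate")
```

The result exceeds the 120 MWh actually delivered because part of the nameplate capacity is unusable and part is lost on discharge.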

Power purchase agreements (PPAs) enable AI operators to secure renewable energy from off-site projects when on-site generation isn’t practical. Virtual PPAs allow organizations to support renewable development while receiving renewable energy certificates that offset their carbon footprint. These long-term contracts provide cost predictability while supporting the development of new renewable energy projects.

Hybrid renewable systems combine multiple clean energy sources to maximize availability and reliability. Wind and solar generation often complement each other, with wind resources frequently strongest during evening hours when solar generation decreases. Adding green hydrogen production creates long-term energy storage that can provide carbon-free backup power during extended periods of low renewable generation.

Grid integration services allow AI data centers to participate in renewable energy markets while supporting grid stability. Demand response programs compensate facilities for reducing power consumption during peak grid stress periods. Frequency regulation services use AI data center power systems to help maintain grid stability, creating revenue opportunities while supporting renewable energy integration.

Backup Power and Redundancy Systems

Backup power systems for AI data centers must provide immediate response to power interruptions while supporting sustained operations during extended outages. The high power densities and continuous nature of AI workloads create unique requirements that traditional backup systems struggle to meet.

Uninterruptible power supply (UPS) systems provide instantaneous backup power during the transition from utility power to backup generators. Modern UPS systems for AI applications use lithium-ion batteries that offer faster charging, longer life, and higher power density compared to traditional lead-acid systems. These advanced systems can support AI rack loads exceeding 80kW while maintaining runtime sufficient for generator startup.
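The runtime requirement can be turned into an energy figure with a short calculation. This sketch assumes a constant load and a hypothetical 60-second generator start and transfer time. The safety margin and the function name are also assumptions for illustration.

```python
def ups_bridge_energy_kwh(load_kw: float,
                          bridge_seconds: float,
                          margin: float = 1.0) -> float:
    """Energy the UPS must deliver to carry load_kw until generators take over."""
    return load_kw * bridge_seconds / 3600.0 * margin


# One 80 kW rack bridged for an assumed 60 s generator start, with a 1.5x margin.
per_rack_kwh = ups_bridge_energy_kwh(80, 60, margin=1.5)
print(f"{per_rack_kwh:.2f} kWh per rack")
```

Multiplying the per-rack result by the number of racks gives a rough lower bound on UPS battery energy, before accounting for battery aging or inverter losses.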

Generator systems provide extended backup power capability during prolonged outages. AI data centers typically deploy multiple generators in N+1 or 2N configurations to ensure redundancy. Natural gas generators offer advantages over diesel systems, including cleaner emissions, a continuous fuel supply through utility pipeline connections (as long as gas service itself is uninterrupted), and reduced maintenance requirements. Some facilities implement dual-fuel systems capable of operating on natural gas or hydrogen for maximum flexibility.

Energy storage alternatives to traditional generators are gaining adoption for their environmental benefits and operational advantages. Large-scale battery systems can provide backup power without emissions, noise, or fuel requirements. Battery systems typically feature faster response times than generators and can provide grid services during normal operations to offset their cost.

Redundant utility connections eliminate single points of failure in primary power supply. AI data centers in major markets often connect to multiple utility substations fed from different transmission sources. Automatic transfer systems detect utility failures and switch to backup connections within milliseconds, preventing any interruption to AI operations.

Fuel cell systems provide an emerging backup power option that combines the environmental benefits of renewable energy with the reliability of on-site generation. Hydrogen fuel cells can operate continuously as long as fuel is available and produce only water vapor as a byproduct. Integration with on-site hydrogen production using renewable energy creates a completely carbon-free backup power system.

AI Energy Scaling Considerations

AI energy scaling requires infrastructure designed for exponential growth rather than linear expansion. Traditional data center planning assumes gradual capacity increases, but AI deployment often involves rapid scaling that can double or triple power requirements within months.
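The difference between linear and exponential planning shows up quickly in a projection. The sketch below assumes a constant doubling period, which is a simplification. The function names and the example numbers are hypothetical.

```python
import math


def projected_mw(initial_mw: float, months: float, doubling_months: float) -> float:
    """Power demand after `months`, assuming it doubles every `doubling_months`."""
    return initial_mw * 2 ** (months / doubling_months)


def months_until_limit(initial_mw: float, limit_mw: float, doubling_months: float) -> float:
    """Months until demand reaches limit_mw under the same doubling assumption."""
    return doubling_months * math.log2(limit_mw / initial_mw)


# Hypothetical site: 10 MW today, doubling every 6 months, 80 MW utility limit.
print(projected_mw(10, 12, 6))        # demand after one year
print(months_until_limit(10, 80, 6))  # months until the utility limit is reached
```

Under these assumptions a site with eight times its current demand in available capacity exhausts that capacity in a year and a half, which is often shorter than the lead time for new utility connections.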

Horizontal scaling distributes AI workloads across multiple facilities to manage power requirements and improve resilience. Rather than concentrating all AI infrastructure in a single massive facility, operators deploy smaller, specialized sites that can be powered by local renewable resources. This approach reduces transmission constraints while improving fault tolerance through geographic diversity.

Modular infrastructure design enables rapid capacity expansion without major construction projects. Prefabricated power modules, cooling systems, and IT equipment can be deployed quickly as AI power requirements grow. Modular systems also provide flexibility to upgrade individual components as technology advances without disrupting existing operations.

Edge deployment strategies position AI computing closer to end users to reduce latency while distributing power requirements across multiple smaller facilities. Edge AI data centers typically operate at 1-10MW scale compared to 50-100MW+ for hyperscale facilities, making them easier to power using local renewable resources and distributed backup systems.

Efficiency optimization becomes critical as AI power requirements scale. Advanced workload management software can optimize AI model placement based on power availability, renewable energy generation, and cooling capacity. These systems can migrate workloads between facilities to balance load and maximize renewable energy utilization.

Future technology planning must account for evolving AI hardware and software requirements. Next-generation AI chips promise improved efficiency but may require different power delivery characteristics. Quantum computing integration could create entirely new power and cooling requirements. Infrastructure must be designed with sufficient flexibility to accommodate these emerging technologies.

Best Practices for AI Data Center Design

Implementing energy-resilient AI data center design requires following proven best practices that address the unique challenges of high-power, continuous-operation computing environments. These practices encompass planning, implementation, and ongoing optimization strategies.

Strategic site selection prioritizes power availability over traditional location factors. Modern AI data center site selection evaluates utility capacity, renewable energy resources, transmission access, and regulatory environment before considering proximity to population centers. Sites with abundant solar or wind resources, supportive utility partnerships, and streamlined permitting processes provide significant advantages for AI deployment.

Power-first design methodology sizes all infrastructure based on maximum sustained AI workload requirements rather than average consumption. This approach ensures adequate capacity for peak training operations while providing headroom for future growth. It also drives the choice of power distribution, cooling, and backup systems, each of which must support continuous operation at maximum load.
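A power-first sizing pass can be sketched as IT load multiplied by a facility overhead factor and a growth allowance. The sketch uses power usage effectiveness (PUE, total facility power divided by IT power) as the overhead factor. The PUE of 1.3, the 20% headroom, and the rack count are assumptions for illustration only.

```python
def facility_power_kw(racks: int,
                      kw_per_rack: float,
                      pue: float,
                      growth_headroom: float = 0.0) -> float:
    """Total facility power sized on maximum sustained IT load.

    pue covers cooling and distribution overhead; growth_headroom is a
    fraction (0.2 = 20%) reserved for future expansion.
    """
    it_load_kw = racks * kw_per_rack  # every rack at its maximum sustained draw
    return it_load_kw * pue * (1.0 + growth_headroom)


# Hypothetical hall: 100 racks at 80 kW, assumed PUE 1.3, 20% growth headroom.
print(round(facility_power_kw(100, 80, pue=1.3, growth_headroom=0.2)), "kW")
```

Sizing on average rather than maximum rack draw would lower the first term and leave the facility short during sustained training runs.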

Integrated cooling solutions combine multiple cooling technologies to handle the extreme heat generation of AI hardware. Liquid cooling systems directly cool GPU processors while precision air conditioning manages ambient temperatures. Some facilities implement immersion cooling for maximum efficiency, submerging entire servers in dielectric fluids for superior heat removal.

Advanced monitoring implementation deploys comprehensive sensors and control systems throughout the facility to track power consumption, temperature, humidity, and equipment health in real-time. Machine learning algorithms analyze this data to predict potential failures, optimize energy usage, and automatically adjust systems for maximum efficiency.
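As a stand-in for the machine learning analysis mentioned above, the sketch below uses a much simpler technique: a rolling z-score that flags readings far from the recent average. It is meant only to illustrate the idea of automated anomaly flagging. The window size, threshold, and sample readings are assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(readings: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices of readings more than `threshold` standard deviations
    away from the mean of the preceding `window` readings."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical per-rack power readings in kW, with one sudden spike.
rack_kw = [30.0, 30.2, 29.9, 30.1, 30.0, 30.1, 45.0, 30.0]
print(flag_anomalies(rack_kw))  # [6]
```

The same pattern applies to temperature or humidity streams. A production system would typically use models that account for workload schedules and seasonal effects.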

Flexible infrastructure deployment uses modular components that can be reconfigured as AI requirements evolve. Raised floor systems with removable panels, cable management systems that support rapid changes, and modular power distribution enable quick adaptation to new hardware configurations without major construction.

Sustainability integration incorporates renewable energy, energy storage, and efficiency measures from the initial design phase rather than retrofitting these capabilities later. On-site solar arrays, battery storage systems, and grid interconnection capabilities are planned as integral infrastructure components rather than additions.

Redundancy optimization balances reliability requirements with cost considerations by implementing N+1 redundancy for critical systems while avoiding over-provisioning non-essential components. Critical AI workloads receive full redundant power paths while support systems may operate with lower redundancy levels.

Commissioning and testing protocols validate all systems under full load conditions before placing AI workloads into production. This includes testing backup power systems, failover procedures, and cooling performance under maximum heat load conditions to ensure reliable operation when AI systems are deployed.

Future-Proofing Your Energy Infrastructure

Future-proofing energy infrastructure for AI data centers requires anticipating technological evolution while building flexibility into current designs. The rapid pace of AI development means infrastructure decisions made today must support unknown technologies and applications that will emerge over the next decade.

Technology roadmap planning evaluates emerging AI hardware trends to inform infrastructure decisions. Quantum computing integration, neuromorphic processors, and advanced AI accelerators may require different power delivery characteristics than current GPU-based systems. Infrastructure designs should include sufficient flexibility to accommodate these emerging technologies without major reconstruction.

Grid modernization integration positions AI data centers to benefit from smart grid capabilities as they become available. Vehicle-to-grid connections, dynamic pricing systems, and automated demand response programs will create new opportunities for AI facilities to optimize their energy usage while supporting grid stability.

Regulatory compliance preparation addresses evolving environmental and safety requirements for high-power facilities. Carbon reporting requirements, renewable energy mandates, and efficiency standards are becoming more stringent across major markets. Infrastructure designs should exceed current requirements to avoid costly retrofits as regulations evolve.

Expandability planning reserves space and capacity for future power infrastructure additions. This includes oversized electrical rooms, spare conduit capacity for new circuits, and structural provisions for additional cooling equipment. The cost of planning for future expansion during initial construction is minimal compared to retrofitting occupied facilities.

Partnership strategies align with energy companies that demonstrate long-term commitment to AI infrastructure development. The most effective partnerships combine renewable energy development expertise with deep understanding of AI power requirements, enabling integrated solutions that address both current needs and future growth.

FAQ Section

What are the typical power requirements for AI data centers compared to traditional facilities?

AI data centers require 30-80kW per rack compared to 8-15kW for traditional data centers. Modern AI chips consume 700W-1200W each, with typical configurations using 8 GPUs per server. A fully loaded 80kW AI rack can demand as much power as 5-10 traditional server racks.

How do backup power systems differ for AI workloads?

AI backup systems must support much higher power densities and longer runtime requirements. UPS systems need sufficient capacity for 80kW+ racks, while generators must provide sustained power for extended training operations that can run for weeks. Battery storage systems are increasingly preferred for their faster response times and environmental benefits.

What renewable energy options work best for AI data centers?

Solar combined with battery storage provides the most practical renewable solution for AI facilities. On-site solar arrays can provide daytime power while battery systems store excess energy for night operations. Power purchase agreements (PPAs) offer alternatives when on-site generation isn’t feasible.

How important is cooling in AI data center power planning?

Cooling typically accounts for 35-40% of total power consumption in AI data centers. The extreme heat generation from high-density GPU clusters requires advanced cooling solutions like liquid cooling or immersion systems. Cooling power requirements must be included in total facility power planning from the initial design phase.

What are the key considerations for AI data center site selection?

Power availability is the primary factor, followed by access to renewable energy resources, utility partnerships, and transmission capacity. Traditional factors like proximity to population centers are secondary to ensuring adequate power infrastructure and supportive regulatory environments for high-power facilities.

Powering the Future of AI Infrastructure

Meeting power requirements for AI data centers requires a fundamental shift from traditional approaches to infrastructure design and energy procurement. The unprecedented power demands of AI workloads, combined with the need for continuous operations and sustainability goals, demand integrated solutions that address power generation, distribution, backup systems, and cooling technologies as a cohesive ecosystem.

The organizations that will succeed in the AI revolution are those that recognize energy infrastructure as a strategic advantage rather than a utility function. By implementing comprehensive energy resilience strategies, partnering with experienced energy developers, and designing for future scalability, forward-thinking companies can ensure their AI initiatives have the reliable, sustainable power foundation necessary for long-term success.

174 Power Global specializes in developing energy-resilient infrastructure solutions specifically designed for AI and high-performance computing workloads. Our integrated approach combines renewable energy development, advanced storage systems, and comprehensive power delivery to create energy campuses that support the most demanding AI applications. Contact 174 Power Global to discover how our energy infrastructure expertise can power your AI ambitions with the reliability and sustainability your organization requires.