The explosion of generative AI, large language models, and high-performance computing (HPC) has fundamentally reshaped digital infrastructure. Unlike traditional data centers, AI-powered facilities require far more electricity to run massive GPU clusters, train deep learning models, and deliver real-time inference.

Electricity consumption from data centers, AI, and cryptocurrency could double by 2030, with AI being a major driver of this increase. As a result, understanding the power requirements for AI data centers is now critical for anyone involved in planning, operating, or investing in next-generation digital infrastructure.

How much power these data centers need is one thing, but the more pressing issue is how reliably, efficiently, and sustainably that power is delivered. Between compute energy and advanced cooling systems, operators must now account for enormous, continuous demand while navigating tightening emissions standards and evolving ESG expectations.

The challenge is as much about infrastructure as it is about innovation. From renewable integration to energy storage and grid access, AI data centers represent a new era of energy strategy where foresight, flexibility, and resilience are paramount.

Understanding the Power Requirements for AI Data Centers

As AI applications scale, so too does their energy appetite. From training foundation models to supporting real-time user interactions, AI data centers consume significantly more power than traditional cloud or enterprise facilities. These demands are also more variable and sustained, requiring an entirely new way of thinking about energy procurement and system design.

Why AI Workloads Demand More Energy Than Ever

AI workloads are computationally intensive by nature. Training a single large model, such as a GPT-class language model or a multimodal AI system, can consume hundreds of megawatt-hours of electricity. Unlike standard data processing tasks, these models rely on dense clusters of GPUs or TPUs operating simultaneously, sometimes for weeks at a time. This continuous high-performance computing pushes infrastructure to its limits and creates significant heat output, further driving up energy needs through cooling requirements.
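To see how a single training run reaches that scale, the arithmetic is roughly GPUs × power per GPU × hours, scaled up by facility overhead (cooling and power conversion). The sketch below is a back-of-the-envelope estimate only; the cluster size, the 700 W per-GPU draw, the 30-day duration, and the PUE of 1.3 are illustrative assumptions, not figures for any specific model.

```python
def training_energy_mwh(num_gpus: int, watts_per_gpu: float, days: float,
                        pue: float = 1.0) -> float:
    """Rough facility energy for a training run, in megawatt-hours.

    All inputs are illustrative assumptions, not measured figures.
    """
    it_kw = num_gpus * watts_per_gpu / 1000.0   # IT load in kW
    it_mwh = it_kw * days * 24 / 1000.0         # kW x hours = kWh, then to MWh
    return it_mwh * pue                         # add cooling/overhead via PUE


# Hypothetical run: 1,000 GPUs at 700 W each for 30 days, PUE of 1.3.
print(round(training_energy_mwh(1000, 700, 30, pue=1.3), 1))  # → 655.2
```

Even this modest hypothetical cluster lands in the hundreds of megawatt-hours; larger clusters or longer runs scale the result linearly.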

According to McKinsey, data centers supporting AI could require up to 3x more power per square foot than traditional facilities, driven by the shift toward specialized hardware like GPUs and TPUs. They estimate that electricity demand from data centers in the U.S. could increase by 15 to 20 percent annually through 2030, with AI-related processing as a major contributor.
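Annual growth of 15 to 20 percent compounds quickly. The short sketch below assumes a 2024 baseline (the estimate above does not state one), which gives six years of growth through 2030.

```python
def growth_multiplier(annual_rate: float, years: int) -> float:
    """Total growth factor after compounding annual_rate for the given years."""
    return (1 + annual_rate) ** years


# Assumed baseline year of 2024, so "through 2030" spans six years of growth.
for rate in (0.15, 0.20):
    print(f"{rate:.0%} per year -> {growth_multiplier(rate, 6):.2f}x")
# 15% per year -> 2.31x
# 20% per year -> 2.99x
```

Under that assumption, U.S. data center demand would end the period roughly 2.3 to 3 times higher than at the baseline.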

As workloads become more complex and model sizes continue to scale, power consumption is expected to grow in lockstep, making energy availability and infrastructure planning a critical part of any AI deployment strategy.

How GPU Clusters and Model Training Shape Energy Needs

Traditional CPU-based systems are no longer sufficient for the parallel processing AI requires. Instead, hyperscalers and enterprise developers deploy massive GPU clusters, often in pods that demand upwards of 80 kW per rack, more than double the density of a conventional data center. These high-density configurations necessitate equally robust power infrastructure to support startup surges, load balancing, and sustained compute over long durations.
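Rack density translates directly into the power a pod must be provisioned for. Only the 80 kW per rack figure comes from the text; the 100-rack pod, the 20% headroom for startup surges, and the 10 kW per rack used for a conventional comparison are hypothetical values chosen for illustration.

```python
def pod_power_mw(racks: int, kw_per_rack: float, surge_margin: float = 0.0) -> float:
    """Power a pod of racks must be provisioned for, in MW.

    surge_margin is an assumed fractional headroom for startup surges.
    """
    return racks * kw_per_rack * (1 + surge_margin) / 1000.0


# Hypothetical comparison on the same 100-rack footprint.
ai_mw = pod_power_mw(100, 80, surge_margin=0.2)   # AI pod at 80 kW/rack
legacy_mw = pod_power_mw(100, 10)                 # assumed 10 kW/rack conventional
print(f"AI pod: {ai_mw:.1f} MW, conventional: {legacy_mw:.1f} MW")
```

With these assumptions the AI pod needs about 9.6 MW of provisioned capacity versus 1.0 MW for the conventional layout, which is why power delivery, not floor space, becomes the binding constraint.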

Model training adds another layer of complexity. Training power draw is not steady: it can swing sharply, for example when thousands of GPUs pause together to synchronize or save checkpoints and then resume full compute. Operators must therefore design infrastructure capable of dynamic scaling without compromising grid stability.

These fluctuations make energy forecasting difficult and underscore the need for integrated renewable solutions and advanced storage to buffer against spikes. Moreover, training sessions often require synchronization across thousands of GPUs, which amplifies both power draw and the consequences of any interruption.
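One common way storage buffers spikes is peak shaving: the grid supplies load up to a contracted cap, a battery covers anything above it, and the battery recharges from spare headroom when load falls back below the cap. The function below is a simplified hourly sketch of that idea. The cap, battery size, and load profile are hypothetical, and it ignores inverter power limits and round-trip losses.

```python
def peak_shave(load_mw, grid_cap_mw, battery_mwh, step_hours=1.0):
    """Serve load from the grid up to a cap; discharge a battery for the excess.

    Simplified: ignores charge/discharge power limits and efficiency losses,
    and recharges from spare grid headroom when load is below the cap.
    Returns (grid draw per step in MW, unserved energy in MWh).
    """
    soc = battery_mwh  # assume the battery starts full
    grid, unserved = [], 0.0
    for load in load_mw:
        if load > grid_cap_mw:
            need = (load - grid_cap_mw) * step_hours  # MWh above the cap
            used = min(need, soc)
            soc -= used
            unserved += need - used
            grid.append(grid_cap_mw)
        else:
            headroom = (grid_cap_mw - load) * step_hours
            charge = min(headroom, battery_mwh - soc)  # refill, never above capacity
            soc += charge
            grid.append(load + charge / step_hours)
    return grid, unserved


# Hypothetical hourly training load (MW) against a 50 MW grid cap.
grid, unserved = peak_shave([40, 55, 60, 45], grid_cap_mw=50, battery_mwh=20)
print(grid, unserved)
```

Here the 20 MWh battery absorbs both spikes, so grid draw never exceeds the cap and no load goes unserved; shrinking the battery to 5 MWh would leave 10 MWh unserved in the third hour.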

For AI data centers, power is a core design constraint and competitive differentiator. Meeting this need effectively requires aligning technical strategy with forward-looking energy planning.

Meeting Compute Energy Demands at Scale

As AI becomes more embedded in everyday business operations, the compute energy demands of data centers are pushing beyond the limits of traditional infrastructure. Meeting these needs requires intentional design and sustainable foresight.

Designing for Continuous, Peak-Load Operations

Unlike cyclical or task-specific workloads that peak and drop, compute energy demand for AI is often constant, especially in environments running model training or inference around the clock. Facilities need to be engineered not just for average load but for sustained peak usage, where downtime or underperformance isn’t acceptable.

Designing infrastructure to meet this type of persistent demand means deploying redundant systems, high-capacity transformers, and power distribution units that can handle fluctuating voltage without interrupting performance. It’s also vital to ensure facilities can adapt over time as AI models and hardware continue to evolve.
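Redundancy is usually expressed with shorthand such as N (just enough capacity), N+1 (one spare unit), or 2N (a fully duplicated set). The sketch below counts how many power units a hall would need under each scheme. The 9,600 kW load and 1,250 kW module size are hypothetical, and real designs also consider maintenance bypass and distribution paths.

```python
import math


def units_required(load_kw: float, unit_kw: float, scheme: str) -> int:
    """Number of power units (e.g. UPS modules) to install for a redundancy scheme.

    N   : just enough units to carry the load
    N+1 : one spare unit beyond N
    2N  : a fully duplicated second set of N units
    """
    n = math.ceil(load_kw / unit_kw)
    if scheme == "N":
        return n
    if scheme == "N+1":
        return n + 1
    if scheme == "2N":
        return 2 * n
    raise ValueError(f"unknown redundancy scheme: {scheme}")


# Hypothetical 9,600 kW hall served by 1,250 kW modules.
for s in ("N", "N+1", "2N"):
    print(s, units_required(9600, 1250, s))
# N 8
# N+1 9
# 2N 16
```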

Integrating Renewable Energy for Resilience and Sustainability

Reliability is non-negotiable for AI operations, but so is sustainability. As enterprises face increasing pressure to meet ESG goals, many are seeking data centers powered by renewable energy. Solar, wind, and energy storage solutions provide a cleaner, more resilient way to meet rising power requirements for AI data centers.

Advanced energy storage systems (ESS) bridge the gap between intermittent renewable generation and continuous AI workloads. By storing excess energy generated during peak solar hours and releasing it when demand spikes, ESS helps maintain consistent availability without relying on carbon-heavy backups.
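The time-shifting role of storage can be sketched hour by hour: surplus solar charges the battery, and the battery discharges when load exceeds solar output. The profile, the battery size, and the 90% round-trip efficiency below are hypothetical, and the model ignores charge and discharge power limits.

```python
def solar_time_shift(solar_mw, load_mw, capacity_mwh, efficiency=0.9, step_h=1.0):
    """Hourly sketch: bank surplus solar in a battery, release it when load exceeds solar.

    efficiency is an assumed round-trip efficiency, applied when charging.
    Returns the energy (MWh) that still has to come from the grid or backup.
    """
    stored = 0.0
    from_other = 0.0
    for pv, load in zip(solar_mw, load_mw):
        balance = (pv - load) * step_h  # positive = surplus, negative = shortfall (MWh)
        if balance >= 0:
            stored = min(capacity_mwh, stored + balance * efficiency)
        else:
            discharge = min(-balance, stored)
            stored -= discharge
            from_other += -balance - discharge
    return from_other


# Hypothetical 4-hour profile: flat 20 MW load, solar at 30 MW mid-period.
solar = [0, 30, 30, 0]
load = [20, 20, 20, 20]
print(solar_time_shift(solar, load, capacity_mwh=0))   # no storage
print(solar_time_shift(solar, load, capacity_mwh=20))  # with a 20 MWh battery
```

In this toy profile the battery cuts the energy drawn from other sources from 40 MWh to 22 MWh by moving midday surplus into the final hour.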

Cooling: The Silent Power Consumer

While compute energy often gets top billing in AI infrastructure discussions, cooling is the stealth energy draw that can account for up to 40% of a data center’s total electricity use. The more advanced the processing, the hotter the hardware and the greater the need for sophisticated thermal management.

The Role of Advanced Cooling Systems in Power Strategy

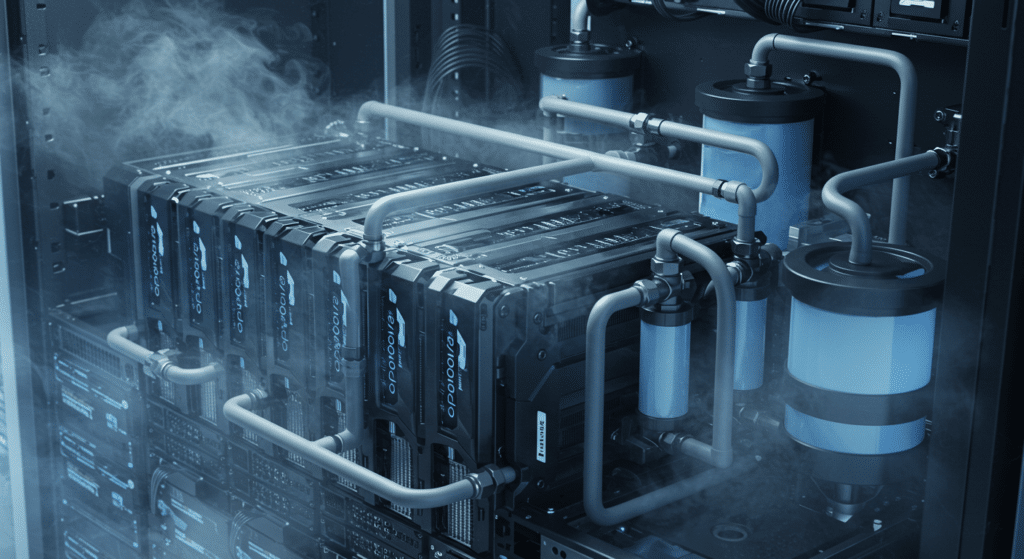

AI data centers can generate extreme heat that standard air cooling can’t handle. This shift has triggered a wave of innovation in thermal management, where precision-engineered solutions are essential to preserve uptime and performance.

Many modern AI facilities are now designed with hybrid cooling systems that combine air and liquid cooling methods. These systems offer greater efficiency and lower energy usage than legacy HVAC units, particularly when implemented alongside AI-driven controls that adjust in real time based on workload intensity and environmental variables.

Innovations in Water Usage, Liquid Cooling, and Heat Recovery

To improve power usage effectiveness (PUE), operators are moving toward liquid immersion cooling, which submerges high-performance servers directly in thermally conductive but electrically non-conductive (dielectric) fluids. This approach reduces cooling energy while cutting noise and infrastructure bulk.
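PUE is total facility energy divided by the energy delivered to IT equipment, so an ideal facility scores 1.0 and every kilowatt-hour spent on cooling or power conversion pushes it higher. The sketch below computes PUE directly, and also shows what the "up to 40%" cooling share cited earlier would imply if all remaining energy went to IT. That second function is a simplifying assumption, since real facilities also lose energy in power conversion and lighting.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def pue_from_overhead_share(overhead_share: float) -> float:
    """PUE implied when overhead_share of total energy is non-IT (e.g. cooling).

    Assumes everything that is not overhead is delivered to IT equipment.
    """
    return 1.0 / (1.0 - overhead_share)


print(f"{pue(1300, 1000):.2f}")                # 1.30: 300 kWh of overhead per 1,000 kWh of IT
print(f"{pue_from_overhead_share(0.4):.2f}")   # 1.67: cooling at 40% of total energy
```

Cutting cooling energy, for example by moving from air to immersion cooling, lowers the numerator and moves PUE toward 1.0.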

Water usage is another critical consideration. Newer designs rely on closed-loop water circuits or minimize water consumption altogether. Some data centers even recover waste heat and reuse it for on-site operations or district heating, an approach that supports both energy efficiency and carbon reduction goals.
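Because essentially all electricity consumed by IT equipment ends up as heat, the heat-recovery opportunity scales with IT load. The sketch below estimates recoverable thermal energy. The 10 MW load and the 60% capture fraction are hypothetical, and the share a real system can deliver depends on temperatures and on having a nearby heat user.

```python
def recoverable_heat_mwh(it_load_mw: float, hours: float, capture_fraction: float) -> float:
    """Thermal energy (MWh) available for reuse from IT waste heat.

    Assumes essentially all IT electricity becomes heat; capture_fraction is
    the assumed share a recovery system can actually deliver to a heat user.
    """
    if not 0.0 <= capture_fraction <= 1.0:
        raise ValueError("capture_fraction must be between 0 and 1")
    return it_load_mw * hours * capture_fraction


# Hypothetical 10 MW IT load over one day, 60% captured for district heating.
print(f"{recoverable_heat_mwh(10, 24, 0.6):.0f} MWh of heat per day")  # 144
```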

Site Selection and Grid Access for Power-Intensive AI Facilities

Where a data center is built is just as important as how it’s powered. Location can determine power availability, scalability, and environmental compliance, all of which are essential when planning for massive power requirements for AI data centers.

Choosing Strategic Locations with High-Capacity Interconnections

AI data centers need to be situated near robust, high-capacity grid infrastructure to ensure reliable access to large volumes of electricity. These sites often require multiple redundant interconnects and proximity to substations that can accommodate multi-megawatt loads.

Top-tier developers look for land with existing utility easements, grid capacity, and renewable generation potential. In some cases, they also invest in on-site generation or energy campus models that colocate solar, storage, and green hydrogen to provide flexible, on-demand energy.

Preparing for Local Regulatory and Environmental Considerations

Beyond the grid, operators must navigate a complex patchwork of zoning regulations, permitting processes, and environmental compliance standards. Because of power constraints and permitting delays, data center construction timelines have stretched to two to four years, and in some cases as long as six, compared with the one-to-three-year timelines typical from 2015 to 2020. A 2025 industry report documented $64 billion worth of data center projects that had been blocked or delayed amid local opposition, and identified permitting delays as a key factor slowing data center expansion across the United States.

Additionally, many new data center permits now require carbon-free power sources, and in 2024 the DOE introduced programs to streamline permitting for certain larger projects, aiming to condense timelines to 24 months. Engaging local stakeholders, anticipating utility constraints, and integrating sustainable practices into site development can speed approvals and improve community buy-in.

Energy Storage and Backup: Building for Reliability

Power reliability is mission-critical for AI operations. Any disruption, no matter how brief, can result in lost data, delayed processing, or damaged hardware. Energy storage and backup infrastructure are central to the compute energy equation.

Why Battery Storage Is Critical for AI Data Centers

Battery Energy Storage Systems (BESS) allow AI data centers to maintain operations during grid instability or outages. They also provide fast-response backup power that kicks in within milliseconds. BESS can be scaled to fit any size facility and integrate seamlessly with both grid-tied and off-grid systems.
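A common sizing question is how much battery energy is needed to carry critical load until standby generation takes over. This sketch assumes a 20 MW critical load, a 10-minute bridge, an 80% usable depth of discharge, and a 10% design margin. All four values are hypothetical, and real designs also account for power rating, battery aging, and temperature.

```python
def bess_size_mwh(critical_load_mw: float, ride_through_min: float,
                  depth_of_discharge: float = 0.8, margin: float = 0.1) -> float:
    """Nameplate battery energy (MWh) needed to bridge a critical load.

    depth_of_discharge: assumed usable fraction of nameplate energy.
    margin: assumed extra design headroom on top of the bridging energy.
    """
    delivered_mwh = critical_load_mw * ride_through_min / 60.0  # MW x hours
    return delivered_mwh * (1 + margin) / depth_of_discharge


# Hypothetical: 20 MW of critical load bridged for 10 minutes.
print(f"{bess_size_mwh(20, 10):.2f} MWh nameplate")  # 4.58
```

The bridging energy itself is only about 3.3 MWh; the usable-depth and margin assumptions push the nameplate requirement to roughly 4.6 MWh.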

In hybrid power environments, batteries help smooth out fluctuations from renewables, ensuring consistent voltage and load balance. Stabilization is especially important for AI tasks that can’t tolerate performance drops or rerouting delays.

Grid Independence and Redundancy in a 24/7 Compute World

Redundancy is a hallmark of AI infrastructure. Leading-edge data centers often go beyond dual feeds to incorporate fully independent microgrids or modular power islands, ensuring total autonomy in case of utility failure. These systems are often paired with renewables, storage, and advanced switchgear to enable seamless transitions and self-healing operations.

This type of grid independence supports resilience and enables operators to participate in demand response programs and grid services, turning backup infrastructure into a revenue-generating asset.

What to Consider When Planning Your Energy Infrastructure

Whether building from the ground up or retrofitting an existing facility, infrastructure planning must start with the unique energy needs of AI. That includes not just raw power but the composition, delivery, and sustainability of that energy.

Customizing Your Energy Mix Based on Use Case

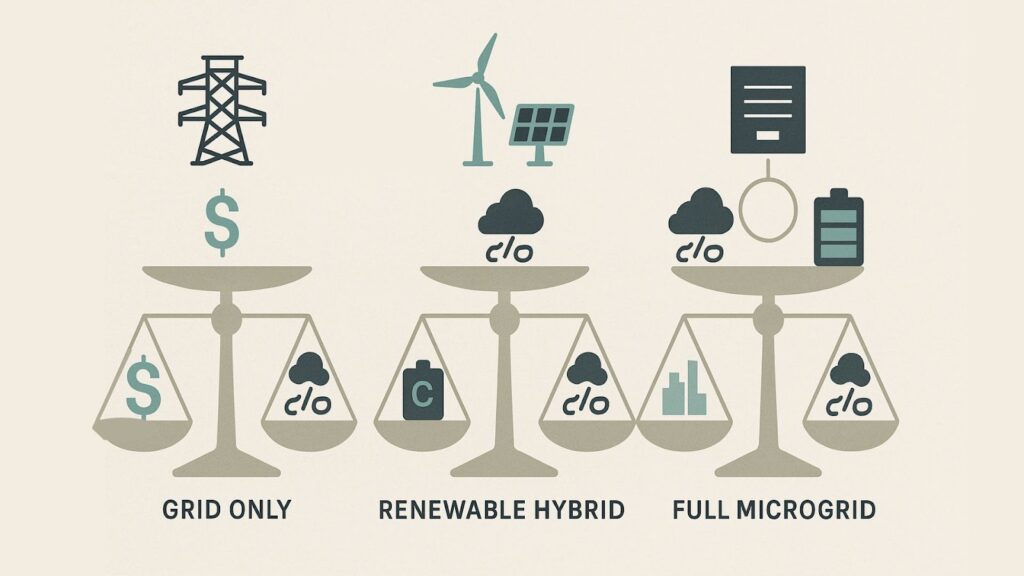

Some AI data centers focus on inference workloads with short bursts of compute, while others specialize in deep learning model training, which demands constant, high-density processing. Your energy mix, including solar, wind, hydrogen, battery, or grid, should be tailored accordingly.

The most forward-thinking operators are adopting blended strategies that combine utility power with on-site renewables, advanced storage, and natural gas as a transitional fuel. This flexibility helps ensure reliability today while building toward net-zero goals tomorrow.

Balancing Cost, Carbon, and Capacity

Every energy decision comes with tradeoffs. High-efficiency systems may reduce operating expenses but require more upfront investment. Carbon neutrality may boost brand reputation but require significant restructuring. And utility grid access may provide the most stable source of power until demand exceeds supply.

AI facility planners must consider long-term total cost of ownership (TCO), carbon accounting, and capacity for future expansion. The right partner can help balance these factors by aligning infrastructure design with business strategy, risk tolerance, and regulatory trends.
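The cost-versus-capex tradeoff above can be framed as a discounted total cost of ownership: up-front capital plus each year's operating cost, discounted back to today, with an optional carbon cost folded in. The two options, the 10-year horizon, and the 8% discount rate below are hypothetical, and a fuller model would add expansion capex, financing, and residual value.

```python
def tco(capex: float, annual_opex: float, years: int, discount_rate: float,
        annual_tco2: float = 0.0, carbon_price: float = 0.0) -> float:
    """Present-value total cost of ownership.

    Up-front capex plus each year's operating cost (and an optional carbon
    cost: tonnes CO2 x an assumed price per tonne), discounted to today.
    """
    yearly = annual_opex + annual_tco2 * carbon_price
    pv = sum(yearly / (1 + discount_rate) ** t for t in range(1, years + 1))
    return capex + pv


# Hypothetical options, in $ millions, over 10 years at an 8% discount rate:
# A is cheaper to build; B costs more up front but less to run.
option_a = tco(capex=100, annual_opex=20, years=10, discount_rate=0.08)
option_b = tco(capex=130, annual_opex=14, years=10, discount_rate=0.08)
print(f"A: {option_a:.1f}  B: {option_b:.1f}")
```

With these made-up numbers, the higher-efficiency option B comes out roughly $10 million cheaper over the period despite its higher capex; a higher discount rate or shorter horizon would narrow that gap.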

Building Smarter AI-Powered Facilities

AI is evolving quickly, and energy strategy must keep pace. Innovations in renewable integration, intelligent power routing, and carbon-aware computing are transforming how data centers are built and operated.

Trends in AI, Automation, and Renewable-First Design

From predictive maintenance to AI-driven energy optimization, automation is reshaping data center operations. Renewable-first design, where energy generation is planned in parallel with compute deployment, is becoming the norm, especially for hyperscale developers with sustainability mandates.

Some innovators are even piloting AI models to manage their own power usage, dynamically shifting workloads based on real-time availability of solar or wind energy. These trends signal a future where intelligence and infrastructure co-evolve, optimizing for both performance and sustainability.
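Shifting workloads toward periods of high solar or wind availability can be illustrated with a simple greedy scheduler: given an hourly forecast of renewable share, run deferrable jobs in the greenest hours. The `schedule_flexible_jobs` helper and the forecast values are hypothetical; production carbon-aware schedulers also weigh deadlines, capacity, and price.

```python
def schedule_flexible_jobs(renewable_share, job_hours):
    """Pick the hours with the highest forecast renewable share for deferrable work.

    renewable_share: forecast fraction of supply from solar/wind for each hour.
    job_hours: how many hours of deferrable compute must run in the window.
    Returns the chosen hour indices in chronological order.
    """
    ranked = sorted(range(len(renewable_share)),
                    key=lambda h: renewable_share[h], reverse=True)
    return sorted(ranked[:job_hours])


# Hypothetical 6-hour forecast; 3 hours of deferrable training to place.
forecast = [0.2, 0.35, 0.7, 0.9, 0.85, 0.4]
print(schedule_flexible_jobs(forecast, 3))  # [2, 3, 4]
```

Latency-sensitive inference would stay pinned to demand, while checkpointable training or batch jobs are natural candidates for this kind of shifting.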

Why Scalability and Sustainability Must Coexist

The tension between growth and environmental responsibility is a defining challenge of the AI era. As power requirements for AI data centers soar, so too does the need for low-carbon, high-capacity solutions that scale alongside technology.

Sustainability and scalability are strategic imperatives. Data centers that succeed in the next decade will be those that treat power as a platform for innovation, resilience, and long-term value.

Future-Proof Your AI Data Center with Smarter Power Solutions

As AI continues to push the boundaries of what’s possible, data center infrastructure must evolve just as boldly. Meeting the power requirements for AI data centers means delivering smarter, cleaner, and more resilient energy at scale. From managing compute energy and cooling demands to building in redundancy and renewable integration, success depends on a forward-thinking energy strategy.

At 174 Power Global, our deep expertise in utility-scale solar, energy storage, and grid interconnection enables us to develop energy solutions tailored to the unique needs of AI data centers. We bring speed, flexibility, and sustainable innovation to every project because we know that when the power is right, everything else performs better. Get in touch with us to start a smarter conversation.